Uncertain Climate Predictions and Certain Energy Progress – Part 3

Computer models that claim to predict future temperatures accurately should also be able to describe past temperatures accurately. But they don't.

In his book Unsettled: What Climate Science Tells Us, What It Doesn’t, and Why It Matters, Steven Koonin summarizes some of the inherent flaws of the computer models used to predict climate change. He writes:

[T]uning is a necessary, but perilous, part of modeling the climate, as it is in modeling of any complex system. An ill-tuned model will be a poor description of the real world, while overtuning risks cooking the books—that is, predetermining the answer. A paper co-authored by fifteen of the world’s leading climate modelers put it this way: “Choices and compromises made during the tuning exercise may significantly affect model results … In theory, tuning should be taken into account in any evaluation, intercomparison, or interpretation of the model results … Why such a lack of transparency? This may be because tuning is often seen as an unavoidable but dirty part of climate modeling, more engineering than science, an act of tinkering that does not merit recording in the scientific literature. There may also be some concern that explaining that models are tuned may strengthen the arguments of those claiming to question the validity of climate change projections. Tuning may be seen indeed as an unspeakable way to compensate for model errors.” … Because no one model will get everything right, the assessment reports average results from an “ensemble” made up of a few dozen different models from research groups around the world … The implication is that the models generally agree. But that isn’t at all the case. Comparisons among models within any of these ensembles show that, on the scales required to measure the climate’s response to human influences, model results differ dramatically both from each other and from observations. But you wouldn’t know that unless you read deep into the IPCC report. Only then would you discover that the results being presented are “averaging” models that disagree wildly with each other. (By the way, the discordance among the individual ensemble members is further evidence that climate models are more than “just physics.” If they weren’t, multiple models wouldn’t be necessary, as they’d all come to virtually the same conclusions.) 
One particularly jarring failure is that the simulated global average surface temperature (not the anomaly) varies among models by about 3°C (5.4°F), three times greater than the observed value of the twentieth-century warming they’re purporting to describe and explain. And two models whose average surface temperatures differ by that much will vary considerably in their details … So here is a real surprise: even as the models became more sophisticated—including finer grids, fancier subgrid parametrizations, and so on—the uncertainty increased rather than decreased. Having better tools and information to work with should make the models more accurate and more in line with each other. That this has not happened is something to keep in mind whenever you read “Models predict …” The fact that the spread in their results is increasing is as good evidence as any that the science is far from settled.
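To make the averaging concern concrete, here is a minimal Python sketch using made-up numbers: five hypothetical models whose values are invented for illustration and do not come from CMIP or any IPCC report. It shows how an ensemble mean yields one tidy figure while the members themselves span about 3 °C, and that a temperature *difference* converts to Fahrenheit by the factor 9/5 alone, with no 32-degree offset. The roughly 1 °C of observed warming is an assumption inferred from the quote's "three times greater" comparison.

```python
from statistics import mean, pstdev

# Hypothetical global-mean surface temperatures (°C) simulated by five
# imaginary models. Illustrative values only, not real CMIP output.
ensemble = [13.0, 14.0, 14.5, 15.5, 16.0]

# Assumption: about 1 °C of observed twentieth-century warming, the value
# implied by the quoted "three times greater" comparison.
observed_warming_c = 1.0

ensemble_mean = mean(ensemble)            # the single number a summary reports
spread_c = max(ensemble) - min(ensemble)  # how far apart the members are
spread_f = spread_c * 9 / 5               # a temperature difference: scale only, no +32
ratio = spread_c / observed_warming_c     # spread relative to the observed warming

print(f"ensemble mean: {ensemble_mean:.1f} °C")
print(f"inter-model spread: {spread_c:.1f} °C ({spread_f:.1f} °F)")
print(f"population std dev: {pstdev(ensemble):.2f} °C")
print(f"spread / observed warming: {ratio:.1f}x")
```

The mean by itself says nothing about how much the members disagree; the spread has to be reported alongside it for a reader to judge that.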

And even as the models' predictions diverge ever more widely from one another, they also fail, when run over past periods, to reproduce temperature changes we know occurred. As Koonin writes:

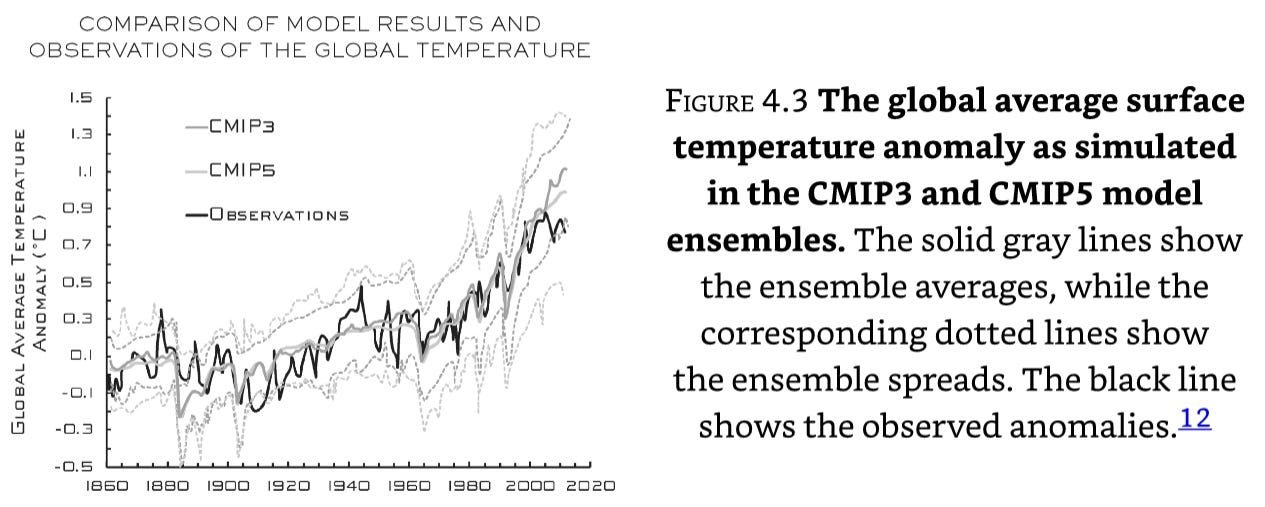

[A]nother equally serious issue is also illustrated here: Figure 4.3 shows that the ensembles [of computer models] fail to reproduce the strong warming observed from 1910 to 1940. On average, the models give a warming rate over that period of about half what was actually observed … Because we have only about 150 years of good observations, systematic behaviors that occur over longer timescales are less well known—there could be (and almost certainly are) other natural cyclic variations occurring over even longer periods. Cycles like these influence global and regional climates and are superimposed upon any trends due to human or natural forcings like greenhouse gas emissions or volcanic aerosols. They make it difficult to determine which observed changes in the climate are due to human influences and which are natural …

An analysis of 267 simulations run by twenty-nine different CMIP6 models created by nineteen modeling groups around the world shows that they do a very poor job of describing warming since 1950 and continue to underestimate the rate of warming in the early twentieth century. The failure of even the latest models to warm rapidly enough in the early twentieth century suggests that it’s possible, even likely, that internal variability—the natural ebbs and flows of the climate system—has contributed significantly to the warming of recent decades. That the models can’t reproduce the past is a big red flag—it erodes confidence in their projections of future climates. In particular, it greatly complicates sorting out the relative roles of natural variability and human influences in the warming that has occurred since 1980.

And as we explored in a previous essay, these vast differences among predictions stem from the fact that the grid cells used in these computer models are too large to capture the formation of clouds, which is governed by processes that operate at much smaller scales. As Koonin writes:

These higher sensitivities seem to arise from these models’ subgrid representations of clouds and their interaction with aerosols. As one of the lead researchers said: “Cloud-aerosol interactions are on the bleeding edge of our comprehension of how the climate system works, and it’s a challenge to model what we don’t understand. These modelers are pushing the boundaries of human understanding, and I am hopeful that this uncertainty will motivate new science.”

Oddly, a recent National Academies’ report noted: “The uncertainties in modeling of both climate change and the consequences of albedo modification [that is, modifying the planet to increase the earth’s reflectivity] make it impossible today to provide reliable, quantitative statements about relative risks, consequences, and benefits of albedo modification to the Earth system as a whole, let alone benefits and risks to specific regions of the planet.” Yet, as Koonin points out, “If ‘the uncertainties in modeling’ mean these models can’t give us useful information about what albedo modification might do, it’s hard to see why they would be any better at predicting the response to other human influences,” or even to the effects of increased cloud cover that might naturally occur.

And a close analysis of various Intergovernmental Panel on Climate Change (IPCC) reports shows that they contain admissions of “low confidence” in some of their findings, including these from the Working Group I (WGI) contribution to the IPCC’s Fifth Assessment Report (AR5):

“… low confidence regarding the sign of trend in the magnitude and/or frequency of floods on a global scale.” [IPCC. AR5 WGI Section 2.6.2.2]

“… low confidence in a global-scale observed trend in drought or dryness (lack of rainfall) since the middle of the 20th century …” [IPCC. AR5 WGI Section 2.6.2.3]

The Executive Summary of Chapter 3 of the IPCC’s 2012 Special Report on Extreme Events (SREX) itself states:

Many weather and climate extremes are the result of natural climate variability (including phenomena such as El Niño), and natural decadal or multi-decadal variations in the climate provide the backdrop for anthropogenic [human-caused] climate changes. Even if there were no anthropogenic changes in climate, a wide variety of natural weather and climate extremes would still occur.

With all that admitted uncertainty, albeit most often buried deep in the text of official reports, how do most people end up with a much more certain sense of impending climatic doom? That will be the subject of the next essay in this series.

Finally, beyond uncertainty, Roger Pielke, Jr., has summarized some of the more egregious outright errors the United Nations has made in its reports on climate change.

Links to all essays in this series: Part 1; Part 2; Part 3; Part 4; Part 5; Part 6; Part 7; Part 8; Part 9.