“Disparate Impact” – Part 8

False claims that disparate impacts are due to racism help obscure real progress among all people.

As John Tierney and Roy Baumeister write in their book The Power of Bad:

The standard of living for the masses remained essentially unchanged until a revolutionary set of ideas and institutions arose in Europe. The fall of the Roman Empire left the continent decentralized with independent fiefdoms where scholars, inventors, and merchants could share knowledge and innovate without imperial interference. The medieval era, formerly mislabeled the “Dark Ages” by nostalgists for the leisure-class splendors of Rome, was actually “one of the great innovative eras of mankind,” in the words of Jean Gimpel, the French historian. He calls it the first industrial revolution. While the Roman economy had been powered by slave labor, medieval engineers tapped natural forces by building dams and new efficient waterwheels throughout Europe. Windmills proliferated and were used to drain the coastal regions of the Low Countries. Germanic “barbarians” developed a much-improved form of steel. The Vikings made major advances in shipbuilding and navigation. Mechanical clocks and eyeglasses were introduced. Agricultural productivity surged thanks to advances in crop rotation and the invention of the harrow and a new heavy plow, so the average person was better fed and healthier than during Roman times. Medieval monasteries promoted research and entrepreneurship as they produced and marketed products much as modern corporations do, with the abbots serving as CEOs. Traders and bankers in the city-states of northern Italy led a commercial revolution, an international exchange of goods and ideas that sparked the Renaissance. When the Italian city-states fell under the sway of foreign monarchs, the merchants and artists moved to the Low Countries. Then, after the burghers were stymied by Hapsburg rulers, the entrepreneurial capital shifted to Britain, where the monarch’s power was constrained by law, and it was there that scientists, engineers, and capitalists collaborated to start the Industrial Revolution.
This was the first truly golden age: the Great Enrichment, as the economist Deirdre McCloskey has dubbed the astonishing burst of prosperity and expansion of freedom in the nineteenth and twentieth centuries. The average human’s income, after stagnating for millennia, increased tenfold in just two centuries. The Industrial Revolution made possible the vast creation of wealth without conquest or enslavement. Philosophers and theologians had long recognized the moral evil of slavery, but the movement to abolish it gained strength only when there were machines available to do the work instead. New technologies were commercialized for the masses, easing burdens for everyone and turning commoners into a bourgeois class with the power to demand new universal rights and freedoms. Two centuries later, the majority of humanity lives in democratic countries that keep growing more prosperous. The Four Horsemen have never posed so little threat. Death has been forestalled by the greatest miracle in the history of the human species: the doubling of life expectancy since the Industrial Revolution. War still ravages some countries, but as Steven Pinker has documented, we are living in what is probably the most peaceful era in history. Never before has the average person faced such a small threat of dying in war or from other forms of violence. The incentive for future war has diminished because there’s no longer an imperative to seize wealth or farmland from neighboring countries. Despite population growth, farmland in North America and Europe has long been reverting to forests and grasslands because farmers can feed more people on less land, and that trend is projected for the rest of the world, too. Famine and Pestilence afflict fewer people than ever before. Last century it was estimated that only half of the world’s people were adequately nourished; today nearly 90 percent are. (The biggest nutritional problem in many places is now obesity.) 
There has been so much global progress against disease since 1950 that life expectancy in poor countries has increased by about thirty years— the most rapid increase in history. Rates of literacy and education have risen around the world, and people enjoy unprecedented leisure. In the middle of the nineteenth century, the typical man in Britain worked more than sixty hours a week, with no annual vacation, from age ten until he died in his fifties. Today’s workers enjoy three times as much leisure over the course of their lives: a gift of two hundred thousand extra hours of free time.

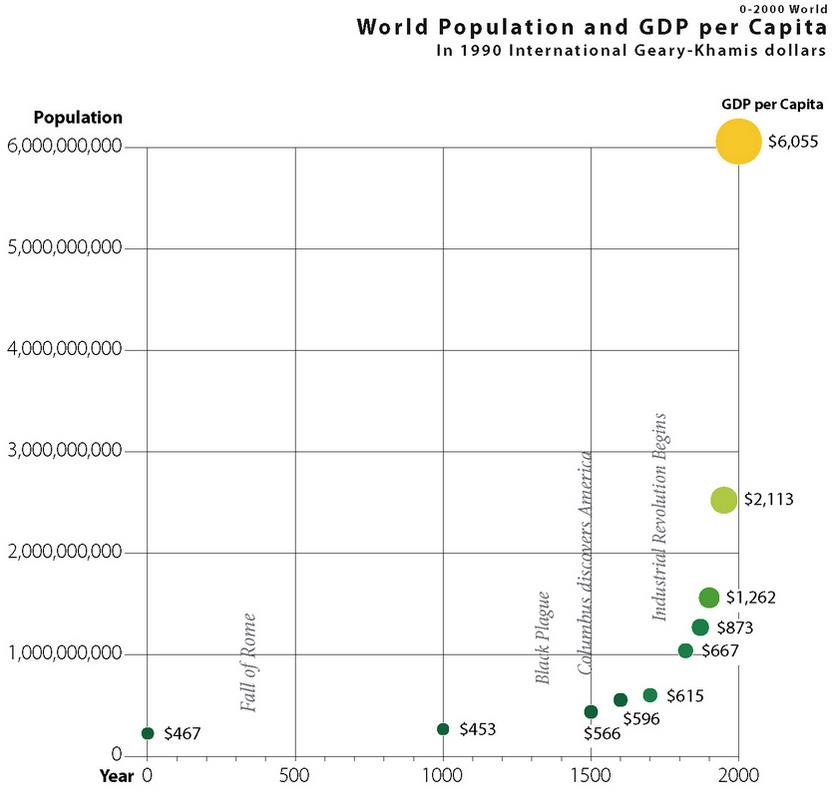

Since the Industrial Revolution, the world has become much richer and more economically equal. For thousands of years, world population and per capita gross domestic product (GDP, the sum total of all the goods and services a society produces) remained essentially flat. Both took off after the Industrial Revolution.

According to Brookings Institution scholar Homi Kharas, “There was almost no middle class before the Industrial Revolution began in the 1830s. It was just royalty and peasants. Now we are about to have a majority middle-class world,” with “middle class” meaning people who have enough money to cover basic needs, such as food, clothing and shelter, and still have enough left over for a few luxuries, such as fancy food, a television, a motorbike, home improvements or higher education.

The following charts show how life has improved in various other ways over the last 200 years.

As Steven Pinker points out, gross domestic product “correlates with every indicator of human flourishing,” including longevity, health, nutrition, peace, freedom, human rights, tolerance, and even self-reported happiness levels. That’s why government policies should be reasonably geared toward increasing GDP.

Pretty good, right? Of course. But as John Tierney and Roy Baumeister write in their book The Power of Bad:

Just about every measure of human well-being has improved except for one: hope. The healthier and wealthier we become, the gloomier our worldview. In international surveys, it’s the richest people who sound the most pessimistic— and also the most clueless. Most respondents in developing countries like Nigeria and Indonesia know that living conditions have improved around the world, and they expect further improvement in the coming decades. But most respondents in affluent countries don’t share that optimism because they don’t realize how much progress is being made. In the past two decades, the rate of child mortality in developing countries has been halved, and the global poverty rate has been reduced by two-thirds, but most North Americans and Europeans think these rates have remained steady or gotten worse. When they’re asked if the world is getting better or worse, or staying the same, fewer than 10 percent say that it’s getting better. By an overwhelming majority, they’re convinced it’s getting worse. We’ve escaped the Four Horsemen, but our brains are still governed by the negativity bias. We react in accordance with an old proverb: No food, one problem. Much food, many problems. We discover First World problems and fret about remote risks. We start seeing problems even when they aren’t there, a propensity that was nicely demonstrated in 2018 by the social psychologist Daniel Gilbert and colleagues. The researchers showed people a series of colored dots and asked them to decide whether each one was blue or not. As the series proceeded, the prevalence of blue dots declined, but people were determined to keep seeing the same number anyway, so they’d start mistakenly classifying purple dots as blue. The same thing happened when people were shown a series of faces and asked to identify the ones with threatening expressions. As the prevalence of threatening faces declined, people would compensate by misclassifying neutral faces as hostile. 
In a final test, people were asked to evaluate a series of proposals for scientific studies— some clearly ethical, some ambiguous, some clearly unethical— and reject the unethical ones. Yet again, as the prevalence of unethical proposals declined, people compensated by rejecting more of the ambiguous proposals. These mistakes happened even when people were explicitly warned ahead of time that the prevalence of the targets would decline, and even when they were specifically instructed to “be consistent” and offered cash bonuses for being accurate. Once they started looking for anomalies, they kept seeing them even as the anomalies were vanishing. Since our brains are primed to spot bad anomalies more readily than good anomalies, we keep imagining new problems even when life improves. As Gilbert notes, “When the world gets better, we become harsher critics of it, and this can cause us to mistakenly conclude that it hasn’t actually gotten better at all. Progress, it seems, tends to mask itself.”

The phenomenon of so-called “disparate impact” analysis, as we’ve explored in previous essays, is a manifestation of this unfortunate tendency among some people to become ever harsher critics of a steadily improving world. It’s part of what one researcher calls “prevalence-induced concept change.” As described in the Harvard Crimson:

In a series of studies, [Daniel] Gilbert, the Edgar Pierce Professor of Psychology, his postdoctoral student David Levari, and several other researchers show that as the prevalence of a problem is reduced, humans are inclined to redefine the problem. As a problem becomes smaller, conceptualizations of the problem expand, which can lead to progress being discounted … “Our studies show … that solving problems causes us to expand our definitions of them,” he said. “When problems become rare, we count more things as problems. Our studies suggest that when the world gets better, we become harsher critics of it, and this can cause us to mistakenly conclude that it hasn’t actually gotten better at all. Progress, it seems, tends to mask itself … We had volunteers look at thousands of dots on a computer screen one at a time and decide if each was or was not blue,” Gilbert said. “We lowered the prevalence of blue dots, and what we found was that our participants began to classify as blue dots they had previously classified as purple.” Even when participants were warned of the tendency, and even when they were offered money to avoid it, they continued to alter their definitions of blue. Another experiment, this one using faces, showed similar results. When the prevalence of threatening faces was reduced, people began to identify neutral faces as threatening. Perhaps the most socially relevant of the studies described in the paper, Gilbert said, involved participants acting as members of an institutional review board, checking research methodology to ensure that scientific studies were ethical. “We asked participants to review proposals for studies that varied from highly ethical to highly unethical,” he said. “Over time, we lowered the prevalence of unethical studies, and sure enough, when we did that, our participants started to identify innocuous studies as unethical.”

(As with disparate impact claims, the concept of “microaggressions,” a tendency to find offensive elements in the most innocuous statements and actions of others, is also a form of prevalence-induced concept change.)

But it gets even worse when it comes to disparate impact analysis. Even as humans may have a tendency to perceive an unending stream of new problems as more and more of the bigger problems are solved, some “disparate impacts” on certain groups can be shown statistically regardless of the actions taken to reduce them. That is, additional disparate impacts can be created by the very process of trying to reduce other disparate impacts elsewhere.

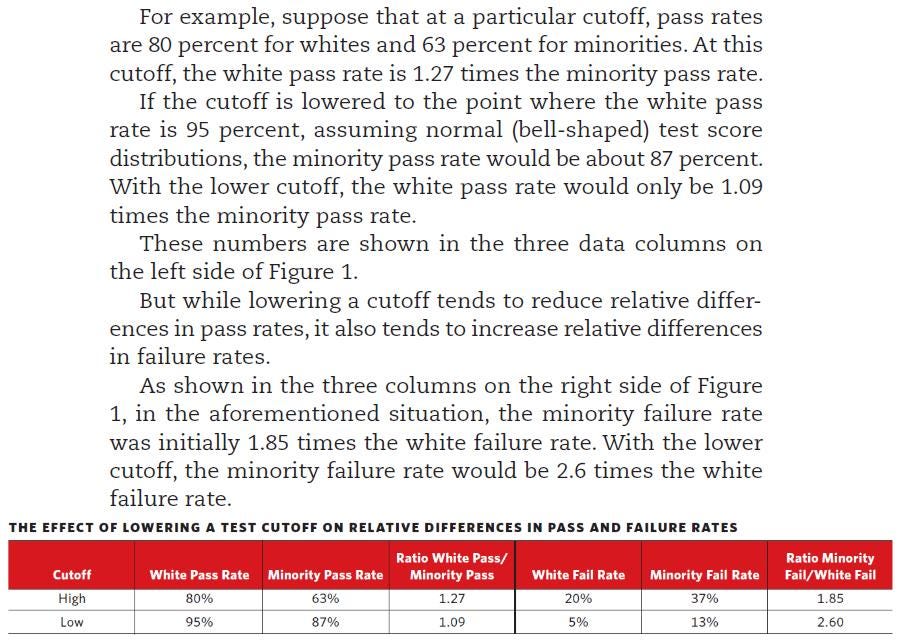

As James Scanlan has pointed out, when score standards are lowered, the ratio of the majority pass rate to the minority pass rate falls (which is the intent of many who want to lower standards), but at the same time, as a matter of statistics, the ratio of the minority rejection rate to the majority rejection rate grows. As Scanlan explains:

Proponents of “disparate impact” analysis thereby often insist on lowering standards (for the purpose of decreasing the percentage gap between minorities and non-minorities that exceed the standards), which lowers disparate impacts on pass rates, but at the same time disparate impacts on failure rates are increased (by increasing the percentage gap between minorities and non-minorities that fail to meet the standards). As a result, the impression is given that intentional discrimination may still be occurring (because a greater percentage of minorities are now failing to meet the standard) even though lowering the standards in the first place to reduce alleged discrimination and to increase minority pass rates was what resulted in the increased disparity in failure rates. In this way, government can end up creating a perpetual “appearance of discrimination” machine that gives the impression of constant and worsening discrimination when no discrimination may have been present in the first place.
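
The arithmetic behind this point can be illustrated with a small sketch. It is not Scanlan's own calculation; it assumes two hypothetical groups whose scores are normally distributed with the same spread and with means half a standard deviation apart, and the names `higher_group`, `lower_group`, and `rate_ratios` are invented for the illustration. It lowers a pass/fail cutoff in steps and reports the ratio of pass rates alongside the ratio of failure rates.

```python
from statistics import NormalDist

# Hypothetical score distributions (illustrative assumptions, not data
# from the essay): same spread, means half a standard deviation apart.
higher_group = NormalDist(mu=0.5, sigma=1.0)
lower_group = NormalDist(mu=0.0, sigma=1.0)


def rate_ratios(cutoff):
    """Return (pass-rate ratio, failure-rate ratio) for a given cutoff.

    pass-rate ratio    = higher group's pass rate / lower group's pass rate
    failure-rate ratio = lower group's failure rate / higher group's failure rate
    """
    fail_high = higher_group.cdf(cutoff)  # share of higher group below the cutoff
    fail_low = lower_group.cdf(cutoff)    # share of lower group below the cutoff
    pass_ratio = (1 - fail_high) / (1 - fail_low)
    fail_ratio = fail_low / fail_high
    return pass_ratio, fail_ratio


# Lower the cutoff step by step and watch the two ratios move apart.
for cutoff in (1.0, 0.0, -1.0):
    pass_ratio, fail_ratio = rate_ratios(cutoff)
    print(f"cutoff {cutoff:+.1f}: pass-rate ratio {pass_ratio:.2f}, "
          f"failure-rate ratio {fail_ratio:.2f}")
```

Under these assumptions, each step down in the cutoff shrinks the pass-rate ratio and enlarges the failure-rate ratio, even though the failure rates of both groups fall in absolute terms and nothing about the underlying distributions has changed. Other distributional assumptions could behave differently, so this is an illustration of the pattern rather than a general proof.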

As Thomas Sowell describes in his book Wealth, Poverty, and Politics: “Those who are in the business of protesting grievances are not going to stop protesting, or taking disruptive or violent action, because equality has been achieved by one definition, when equality by one definition precludes equality by some other definitions.”

In the next essay in this series, we’ll look at how a relatively small minority within one political party may be driving false perceptions of unending and ubiquitous racism.

Links to all essays in this series: Part 1; Part 2; Part 3; Part 4; Part 5; Part 6; Part 7; Part 8; Part 9