Randomness -- Part 6

The randomness of bureaucracies, graders and others, and how to live with them.

In this essay, we’ll continue exploring the role of randomness in people’s lives, focusing on the randomness of bureaucracies, graders, and others, and on how to live with it. We’ll draw on Nassim Taleb’s Fooled by Randomness: The Hidden Role of Chance in Life and Markets and Leonard Mlodinow’s The Drunkard’s Walk: How Randomness Rules Our Lives.

Taleb writes of how bureaucrats are generally trained to ignore the presence of randomness:

Jean-Patrice [a stock trader whom Taleb knew] was abruptly replaced by an interesting civil servant type who had never been involved in the randomness professions. He just went to the right civil servant schools where people learn to write reports and had some senior managerial position in the institution. As is typical with subjectively assessed positions he tried to make his predecessor look bad: Jean-Patrice was deemed sloppy and unprofessional. The civil servant’s first undertaking was to run a formal analysis of our transactions; he found that we traded a little too much, incurring very large back office expenditure. He analyzed a large segment of foreign exchange traders’ transactions, then wrote a report explaining that only close to 1% of these transactions generated significant profits; the rest generated either losses or small profits. He was shocked that the traders did not do more of the winners and less of the losers. It was obvious to him that we needed to comply with these instructions immediately. If we just doubled the winners, the results for the institution would be so great. How come you highly paid traders did not think about it before? Things are always obvious after the fact. The civil servant was a very intelligent person, and this mistake is much more prevalent than one would think. It has to do with the way our mind handles historical information. When you look at the past, the past will always be deterministic, since only one single observation took place. Now the civil servant called the trades that ended up as losers “gross mistakes,” just like journalists call decisions that end up costing a candidate his election a “mistake.” I will repeat this point until I get hoarse: A mistake is not something to be determined after the fact, but in the light of the information until that point.

Bureaucrats have an incentive to ignore randomness because the regulations they issue rest on the assumption that they can reliably change things for the better, when often they merely shift risk around. For example, the 1979 accident at the Three Mile Island nuclear power plant was followed by a slew of regulations based on largely unfounded concerns, and those regulations have squelched the nuclear power industry ever since. Yet that accident (which, incidentally, resulted in no deaths) was probably inevitable at some point anyway. As Mlodinow writes:

In March 1979 another famously unanticipated chain of events occurred, this one at a nuclear power plant in Pennsylvania. It resulted in a partial meltdown of the core, in which the nuclear reaction occurs, threatening to release into the environment an alarming dose of radiation. The mishap began when a cup or so of water emerged through a leaky seal from a water filter called a polisher. The leaked water entered a pneumatic system that drives some of the plant’s instruments, tripping two valves. The tripped valves shut down the flow of cold water to the plant’s steam generator—the system responsible for removing the heat generated by the nuclear reaction in the core. An emergency water pump then came on, but a valve in each of its two pipes had been left in a closed position after maintenance two days earlier. The pumps therefore were pumping water uselessly toward a dead end. Moreover, a pressure-relief valve also failed, as did a gauge in the control room that ought to have shown that the valve was not working. Viewed separately, each of the failures was of a type considered both commonplace and acceptable. Polisher problems were not unusual at the plant, nor were they normally very serious; with hundreds of valves regularly being opened or closed in a nuclear power plant, leaving some valves in the wrong position was not considered rare or alarming; and the pressure-relief valve was known to be somewhat unreliable and had failed at times without major consequences in at least eleven other power plants. Yet strung together, these failures make the plant seem as if it had been run by the Keystone Kops. And so after the incident at Three Mile Island came many investigations and much laying of blame, as well as a very different consequence. 
That string of events spurred Yale sociologist Charles Perrow to create a new theory of accidents, in which is codified the central argument of this chapter: in complex systems (among which I count our lives) we should expect that minor factors we can usually ignore will by chance sometimes cause major incidents … Called normal accident theory, Perrow’s doctrine describes how that happens—how accidents can occur without clear causes, without those glaring errors and incompetent villains sought by corporate or government commissions. But although normal accident theory is a theory of why, inevitably, things sometimes go wrong, it could also be flipped around to explain why, inevitably, they sometimes go right. For in a complex undertaking, no matter how many times we fail, if we keep trying, there is often a good chance we will eventually succeed. In fact, economists like W. Brian Arthur argue that a concurrence of minor factors can even lead companies with no particular edge to come to dominate their competitors. “In the real world,” he wrote, “if several similar-sized firms entered a market together, small fortuitous events—unexpected orders, chance meetings with buyers, managerial whims—would help determine which ones received early sales and, over time, which came to dominate. Economic activity is … [determined] by individual transactions that are too small to foresee, and these small ‘random’ events could [ac]cumulate and become magnified by positive feedbacks over time.”

Taleb also points out that it is even harder to judge whether the actions of government bureaucrats are beneficial than it is to judge the actions of private-sector executives:

Outside the capitalistic system, presumed talent flows to the governmental positions, where the currency is prestige, power, and social rank. There, too, it is distributed disproportionately. The contributions of civil servants might be even more difficult to judge than those of the executives of a corporation—and the scrutiny is smaller. The central banker lowers interest rates, a recovery ensues, but we do not know whether they caused it or if they slowed it down. We can’t even know that they didn’t destabilize the economy by increasing the risk of future inflation. They can always fit a theoretical explanation, but economics is a narrative discipline, and explanations are easy to fit retrospectively.

Of course, just about all measurements are subject to some random error. As Mlodinow writes:

[I]f the Bureau of Labor Statistics measures the unemployment rate in August and then repeats its measurement an hour later, by random error alone there is a good chance that the second measurement will differ from the first by at least a tenth of a percentage point. Would The New York Times then run the headline “Jobs and Wages Increased Modestly at 2 P.M.”?

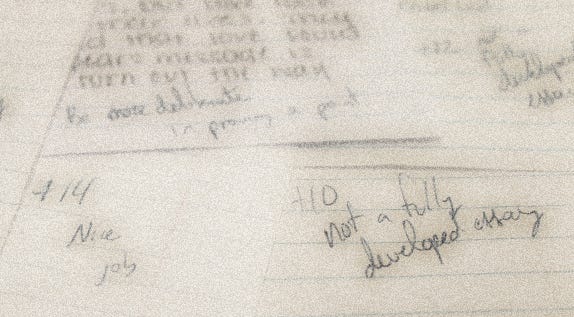

And when subjectivity enters into judgment, results can vary widely. Kids, too, are exposed to the randomness of life when their school projects are graded subjectively. Mlodinow relates a story he was told:

[T]wo of his friends, he said, once turned in identical essays. He thought that was stupid and they’d both be suspended, but not only did the overworked teacher not notice, she gave one of the essays a 90 (an A) and the other a 79 (a C).

As Mlodinow continues:

Numbers always seem to carry the weight of authority. The thinking, at least subliminally, goes like this: if a teacher awards grades on a 100-point scale, those tiny distinctions must really mean something. But if ten publishers could deem the manuscript for the first Harry Potter book unworthy of publication, how could poor Mrs. Finnegan (not her real name) distinguish so finely between essays as to award one a 92 and another a 93? If we accept that the quality of an essay is somehow definable, we must still recognize that a grade is not a description of an essay’s degree of quality but rather a measurement of it, and one of the most important ways randomness affects us is through its influence on measurement. In the case of the essay the measurement apparatus was the teacher, and a teacher’s assessment, like any measurement, is susceptible to random variance and error … The uncertainty in measurement is even more problematic when the quantity being measured is subjective, like Alexei’s English-class essay. For instance, a group of researchers at Clarion University of Pennsylvania collected 120 term papers and treated them with a degree of scrutiny you can be certain your own child’s work will never receive: each term paper was scored independently by eight faculty members. The resulting grades, on a scale from A to F, sometimes varied by two or more grades. On average they differed by nearly one grade. Since a student’s future often depends on such judgments, the imprecision is unfortunate. Yet it is understandable given that, in their approach and philosophy, the professors in any given college department often run the gamut from Karl Marx to Groucho Marx.

Controls on subjective grading don’t yield much more consistent results. Mlodinow writes:

But what if we control for that—that is, if the graders are given, and instructed to follow, certain fixed grading criteria? A researcher at Iowa State University presented about 100 students’ essays to a group of doctoral students in rhetoric and professional communication whom he had trained extensively according to such criteria. Two independent assessors graded each essay on a scale of 1 to 4. When the scores were compared, the assessors agreed in only about half the cases. Similar results were found by the University of Texas in an analysis of its scores on college-entrance essays. Even the venerable College Board expects only that, when assessed by two raters, “92% of all scored essays will receive ratings within ± 1 point of each other on the 6-point SAT essay scale.”

But all this randomness doesn’t mean that people should give up trying. On the contrary, it means they should try harder, and more often. As Taleb writes:

Let me make it clear here: Of course chance favors the prepared! Hard work, showing up on time, wearing a clean (preferably white) shirt, using deodorant, and some such conventional things contribute to success—they are certainly necessary but may be insufficient as they do not cause success. The same applies to the conventional values of persistence, doggedness and perseverance: necessary, very necessary … I am not saying that what your grandmother told you about the value of work ethics is wrong! Furthermore, as most successes are caused by very few “windows of opportunity,” failing to grab one can be deadly for one’s career. Take your luck!

Indeed, the vast benefits we all derive from free-market competition, as explored in a previous essay, depend on large segments of the population being willing to take risks. And the courage to take risks comes from our own innate sense of the extent to which we can direct our own success in life; the greater one’s sense of control, the greater one’s sense of well-being (as explored in a previous essay series on locus of control).

The fact that we all live as butterflies in the “Butterfly Effect” only goes to show the futility of attempts to micromanage people’s lives. As Mlodinow writes:

In the 1960s a meteorologist named Edward Lorenz sought to employ the newest technology of his day—a primitive computer—to carry out Laplace’s program in the limited realm of the weather. That is, if Lorenz supplied his noisy machine with data on the atmospheric conditions of his idealized earth at some given time, it would employ the known laws of meteorology to calculate and print out rows of numbers representing the weather conditions at future times. One day, Lorenz decided he wanted to extend a particular simulation further into the future. Instead of repeating the entire calculation, he decided to take a shortcut by beginning the calculation midway through. To accomplish that, he employed as initial conditions data printed out in the earlier simulation. He expected the computer to regenerate the remainder of the previous simulation and then carry it further. But instead he noticed something strange: the weather had evolved differently. Rather than duplicating the end of the previous simulation, the new one diverged wildly. He soon recognized why: in the computer’s memory the data were stored to six decimal places, but in the printout they were quoted to only three. As a result, the data he had supplied were a tiny bit off. A number like 0.293416, for example, would have appeared simply as 0.293. Scientists usually assume that if the initial conditions of a system are altered slightly, the evolution of that system, too, will be altered slightly. After all, the satellites that collect weather data can measure parameters to only two or three decimal places, and so they cannot even track a difference as tiny as that between 0.293416 and 0.293. But Lorenz found that such small differences led to massive changes in the result. The phenomenon was dubbed the butterfly effect, based on the implication that atmospheric changes so small they could have been caused by a butterfly flapping its wings can have a large effect on subsequent global weather patterns. 
That notion might sound absurd—the equivalent of the extra cup of coffee you sip one morning leading to profound changes in your life. But actually that does happen—for instance, if the extra time you spent caused you to cross paths with your future wife at the train station or to miss being hit by a car that sped through a red light. In fact, Lorenz’s story is itself an example of the butterfly effect, for if he hadn’t taken the minor decision to extend his calculation employing the shortcut, he would not have discovered the butterfly effect, a discovery which sparked a whole new field of mathematics. When we look back in detail on the major events of our lives, it is not uncommon to be able to identify such seemingly inconsequential random events that led to big changes. Determinism in human affairs fails to meet the requirements for predictability alluded to by Laplace for several reasons. First, as far as we know, society is not governed by definite and fundamental laws in the way physics is. Instead, people’s behavior is not only unpredictable, but as Kahneman and Tversky repeatedly showed, also often irrational (in the sense that we act against our best interests). Second, even if we could uncover the laws of human affairs … it is impossible to precisely know or control the circumstances of life. That is, like Lorenz, we cannot obtain the precise data necessary for making predictions. And third, human affairs are so complex that it is doubtful we could carry out the necessary calculations even if we understood the laws and possessed the data. As a result, determinism is a poor model for the human experience. 
Or as the Nobel laureate Max Born wrote, “Chance is a more fundamental conception than causality.” … [A]lthough statistical regularities can be found in social data, the future of particular individuals is impossible to predict, and for our particular achievements, our jobs, our friends, our finances, we all owe more to chance than many people realize … [I]n all except the simplest real-life endeavors unforeseeable or unpredictable forces cannot be avoided, and moreover those random forces and our reactions to them account for much of what constitutes our particular path in life.
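Lorenz’s rounding accident can be reproduced in miniature. The sketch below is a hypothetical illustration, not Lorenz’s original program: it uses the three-variable convection model that bears his name (with the classic textbook parameters sigma = 10, rho = 28, beta = 8/3) as a stand-in for a weather model, and runs it twice from starting points that differ only in whether x is stored as 0.293416 or rounded to 0.293. The other starting values (y = z = 1), the step size, and the run length are arbitrary assumptions chosen for illustration.

```python
# Sensitivity to initial conditions in the Lorenz system, used here as a
# simplified stand-in for a weather model (not Lorenz's actual program).

def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Return the time derivative (dx, dy, dz) of the Lorenz system."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state one step with the classic fourth-order Runge-Kutta method."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def x_gap_over_time(x_full, x_rounded, steps=3000, dt=0.01):
    """Run two simulations that differ only in the starting x value and
    return the absolute gap between their x values after each step."""
    a = (x_full, 1.0, 1.0)     # hypothetical starting y and z
    b = (x_rounded, 1.0, 1.0)
    gaps = []
    for _ in range(steps):
        a = rk4_step(a, dt)
        b = rk4_step(b, dt)
        gaps.append(abs(a[0] - b[0]))
    return gaps


if __name__ == "__main__":
    gaps = x_gap_over_time(0.293416, 0.293)  # six decimal places vs. three
    for step in (0, 500, 1000, 1500, 2000, 2500, 2999):
        print(f"t = {(step + 1) * 0.01:5.2f}   gap in x = {gaps[step]:.6f}")
```

The gap starts out at a fraction of a thousandth and eventually grows as large as the swings of the simulated "weather" itself. Feeding in two identical starting values, by contrast, produces identical runs, since the model contains no randomness at all; the unpredictability comes entirely from not knowing the starting point exactly.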

In the end, Taleb writes:

I believe that the principal asset I need to protect and cultivate is my deep-seated intellectual insecurity. My motto is “my principal activity is to tease those who take themselves and the quality of their knowledge too seriously.” Cultivating such insecurity in place of intellectual confidence may be a strange aim—and one that is not easy to implement. To do so we need to purge our minds of the recent tradition of intellectual certainties … It certainly takes bravery to remain skeptical; it takes inordinate courage to introspect, to confront oneself, to accept one’s limitations—scientists are seeing more and more evidence that we are specifically designed by mother nature to fool ourselves … I never said that every rich man is an idiot and every unsuccessful person unlucky, only that in absence of much additional information it is preferable to reserve one’s judgment. It is safer.

And as Mlodinow concludes:

A path punctuated by random impacts and unintended consequences is the path of many successful people, not only in their careers but also in their loves, hobbies, and friendships. In fact, it is more the rule than the exception … The normal accident theory of life shows not that the connection between actions and rewards is random but that random influences are as important as our qualities and actions … What I’ve learned, above all, is to keep marching forward because the best news is that since chance does play a role, one important factor in success is under our control: the number of at bats, the number of chances taken, the number of opportunities seized. For even a coin weighted toward failure will sometimes land on success. Or as the IBM pioneer Thomas Watson said, “If you want to succeed, double your failure rate.”
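Mlodinow’s point about the number of "at bats" is simple probability. If each attempt independently succeeds with probability p, the chance that all n attempts fail is (1 - p)^n, so the chance of at least one success is 1 - (1 - p)^n, which climbs toward certainty as n grows. The sketch below assumes independent attempts with a constant success rate, which real careers rarely guarantee; the function name and the value p = 0.1 are hypothetical choices for illustration.

```python
def chance_of_at_least_one_success(p, attempts):
    """Probability that at least one of `attempts` independent tries succeeds,
    when each try succeeds with probability p (assumed constant)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # All attempts fail with probability (1 - p) ** attempts.
    return 1.0 - (1.0 - p) ** attempts


if __name__ == "__main__":
    p = 0.1  # hypothetical: a coin weighted 9-to-1 toward failure
    for n in (1, 5, 10, 20, 50):
        print(f"{n:2d} tries -> {chance_of_at_least_one_success(p, n):.1%}")
```

With p = 0.1, a single try succeeds only 10 percent of the time, but ten tries give roughly a 65 percent chance of at least one success, and twenty give nearly 88 percent.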

In the final two essays in this series, we’ll explore another major factor at work in the more random segments of our lives: our emotions.