Randomness -- Part 4

Origins of probability theory and some lingering fallacies.

In this essay, we’ll further explore the role of randomness in people’s lives, focusing on the history of probability theory and on some probability fallacies we still fall for today. Our guides are Nassim Taleb’s Fooled by Randomness: The Hidden Role of Chance in Life and Markets and Leonard Mlodinow’s The Drunkard’s Walk: How Randomness Rules Our Lives.

Mlodinow describes the curious case of the history of probability theory:

[T]he Greeks … didn’t have dice. They did have gambling addictions, however. They also had plenty of animal carcasses, and so what they tossed were astragali, made from heel bones. An astragalus has six sides, but only four are stable enough to allow the bone to come to rest on them. Modern scholars note that because of the bone’s construction, the chances of its landing on each of the four sides are not equal: they are about 10 percent for two of the sides and 40 percent for the other two. A common game involved tossing four astragali. The outcome considered best was a rare one, but not the rarest: the case in which all four astragali came up different. This was called a Venus throw. The Venus throw has a probability of about 384 out of 10,000, but the Greeks, lacking a theory of randomness, didn’t know that … [By the time of the Roman Empire] the Roman statesman Cicero, who lived from 106 to 43 B.C. … was perhaps the greatest ancient champion of probability. He employed it to argue against the common interpretation of gambling success as due to divine intervention, writing that the “man who plays often will at some time or other make a Venus cast: now and then indeed he will make it twice and even thrice in succession. Are we going to be so feeble-minded then as to affirm that such a thing happened by the personal intervention of Venus rather than by pure luck?” Cicero believed that an event could be anticipated and predicted even though its occurrence would be a result of blind chance. He even used a statistical argument to ridicule the belief in astrology. Annoyed that although outlawed in Rome, astrology was nevertheless alive and well, Cicero noted that at Cannae in 216 B.C., Hannibal, leading about 50,000 Carthaginian and allied troops, crushed the much larger Roman army, slaughtering more than 60,000 of its 80,000 soldiers. “Did all the Romans who fell at Cannae have the same horoscope?” Cicero asked.
“Yet all had one and the same end.” … In the end, Cicero’s principal legacy in the field of randomness is the term he used, probabilis, which is the origin of the term we employ today … In replacing, or at least supplementing, the practice of trial by battle, the Romans sought in mathematical precision a cure for the deficiencies of their old, arbitrary system. Seen in this context, the Roman idea of justice employed advanced intellectual concepts. Recognizing that evidence and testimony often conflicted and that the best way to resolve such conflicts was to quantify the inevitable uncertainty, the Romans created the concept of half proof, which applied in cases in which there was no compelling reason to believe or disbelieve evidence or testimony. In some cases the Roman doctrine of evidence included even finer degrees of proof, as in the church decree that “a bishop should not be condemned except with seventy-two witnesses … a cardinal priest should not be condemned except with forty-four witnesses, a cardinal deacon of the city of Rome without thirty-six witnesses, a subdeacon, acolyte, exorcist, lector, or doorkeeper except with seven witnesses.” To be convicted under those rules, you’d have to have not only committed the crime but also sold tickets. Still, the recognition that the probability of truth in testimony can vary and that rules for combining such probabilities are necessary was a start. And so it was in the unlikely venue of ancient Rome that a systematic set of rules based on probability first arose.
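The 384-in-10,000 figure for the Venus throw can be checked directly. Here is a minimal Python sketch, assuming the four stable sides land with the probabilities Mlodinow quotes (10, 10, 40, and 40 percent) and that the four bones are tossed independently. The side names are hypothetical labels invented for the example.

```python
from fractions import Fraction
from itertools import product

# Side probabilities as quoted from Mlodinow: two sides at about 10%, two at about 40%.
# The side names are hypothetical labels used only in this sketch.
SIDE_PROBS = {
    "narrow_a": Fraction(1, 10),
    "narrow_b": Fraction(1, 10),
    "broad_a": Fraction(4, 10),
    "broad_b": Fraction(4, 10),
}

def venus_throw_probability(side_probs=SIDE_PROBS, bones=4):
    """Probability that every bone shows a different side, assuming independent tosses."""
    total = Fraction(0)
    # Enumerate every ordered way the bones can land.
    for throw in product(side_probs, repeat=bones):
        if len(set(throw)) == bones:  # all sides different
            p = Fraction(1)
            for side in throw:
                p *= side_probs[side]
            total += p
    return total

venus = venus_throw_probability()
print(venus, float(venus))
```

There are 24 orderings in which the four bones show four different sides, and each ordering has probability 0.1 × 0.1 × 0.4 × 0.4, which is where the 384 comes from.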

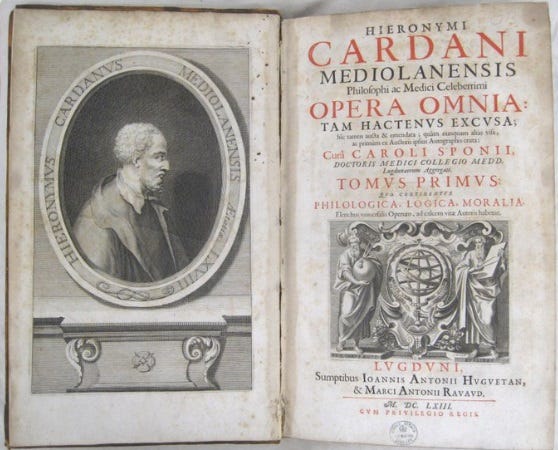

Mlodinow revisits Gerolamo Cardano, whom we met in a previous essay series on the related topic of risk. As Mlodinow writes:

[T]he law of the sample space [is the] framework for analyzing chance situations that was first put on paper in the sixteenth century by Gerolamo Cardano … [In] Cardano’s Book on Games of Chance … there is chapter 14, “On Combined Points” (on possibilities). There Cardano states what he calls “a general rule” -- our law of the sample space. The term sample space refers to the idea that the possible outcomes of a random process can be thought of as the points in a space. In simple cases the space might consist of just a few points, but in more complex situations it can be a continuum, just like the space we live in. Cardano didn’t call it a space, however: the notion that a set of numbers could form a space was a century off, awaiting the genius of Descartes, his invention of coordinates, and his unification of algebra and geometry. In modern language, Cardano’s rule reads like this: Suppose a random process has many equally likely outcomes, some favorable (that is, winning), some unfavorable (losing). Then the probability of obtaining a favorable outcome is equal to the proportion of outcomes that are favorable. The set of all possible outcomes is called the sample space. In other words, if a die can land on any of six sides, those six outcomes form the sample space, and if you place a bet on, say, two of them, your chances of winning are 2 in 6 … The potency of Cardano’s rule goes hand in hand with certain subtleties. One lies in the meaning of the term outcomes. As late as the eighteenth century the famous French mathematician Jean Le Rond d’Alembert, author of several works on probability, misused the concept when he analyzed the toss of two coins. The number of heads that turns up in those two tosses can be 0, 1, or 2. Since there are three outcomes, d’Alembert reasoned, the chances of each must be 1 in 3. But d’Alembert was mistaken … [A] series of two coin tosses is simple enough that Cardano’s methods are easily applied.
The key is to realize that the possible outcomes of coin flipping are the data describing how the two coins land, not the total number of heads calculated from that data, as in d’Alembert’s analysis. In other words, we should not consider 0, 1, or 2 heads as the possible outcomes but rather the sequences (heads, heads), (heads, tails), (tails, heads), and (tails, tails). These are the 4 possibilities that make up the sample space. The next step, according to Cardano, is to sort through the outcomes, cataloguing the number of heads we can harvest from each. Only 1 of the 4 outcomes -- (heads, heads) -- yields 2 heads. Similarly, only (tails, tails) yields 0 heads. But if we desire 1 head, then 2 of the outcomes are favorable: (heads, tails) and (tails, heads). And so Cardano’s method shows that d’Alembert was wrong: the chances are 25 percent for 0 or 2 heads but 50 percent for 1 head. Had Cardano laid his cash on 1 head at 3 to 1, he would have lost only half the time but tripled his money the other half, a great opportunity for a sixteenth-century kid trying to save up money for college -- and still a great opportunity today if you can find anyone offering it.
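Cardano’s method for the two-coin case can be sketched in a few lines of Python: list the equally likely sequences, then count how many yield each number of heads. The last part works out the average result of the 3-to-1 bet, on the assumption (mine, not spelled out in the quote) that a win pays 3 units of profit on a 1-unit stake and a loss forfeits the stake.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# The sample space is the set of equally likely sequences, not the head counts.
sample_space = list(product(["heads", "tails"], repeat=2))

# Catalogue how many sequences yield 0, 1, or 2 heads.
head_counts = Counter(seq.count("heads") for seq in sample_space)
head_probs = {k: Fraction(n, len(sample_space)) for k, n in head_counts.items()}

# Assumed payout: stake 1 unit on "exactly 1 head" at 3 to 1.
# A win returns 3 units of profit; a loss forfeits the 1-unit stake.
expected_profit = head_probs[1] * 3 + (1 - head_probs[1]) * (-1)

print(head_probs)
print(expected_profit)
```

Under that payout assumption the bet earns, on average, one full unit per unit staked, which is why it would be such a good deal.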

Mlodinow then walks the reader through two of the most basic laws of probability. The first is the rule for compounding probabilities, which gives the probability that two independent events will both occur:

That brings us to our next law, the rule for compounding probabilities: If two possible events, A and B, are independent, then the probability that both A and B will occur is equal to the product of their individual probabilities. Suppose a married person has on average roughly a 1 in 50 chance of getting divorced each year. On the other hand, a police officer has about a 1 in 5,000 chance each year of being killed on the job. What are the chances that a married police officer will be divorced and killed in the same year? According to the above principle, if those events were independent, the chances would be roughly 1/50 × 1/5,000, which equals 1/250,000. Of course the events are not independent; they are linked: once you die, darn it, you can no longer get divorced. And so the chance of that much bad luck is actually a little less than 1 in 250,000. Why multiply rather than add? Suppose you make a pack of trading cards out of the pictures of those 100 guys you’ve met so far through your Internet dating service, those men who in their Web site photos often look like Tom Cruise but in person more often resemble Danny DeVito. Suppose also that on the back of each card you list certain data about the men, such as honest (yes or no) and attractive (yes or no). Finally, suppose that 1 in 10 of the prospective soul mates rates a yes in each case. How many in your pack of 100 will pass the test on both counts? Let’s take honest as the first trait (we could equally well have taken attractive). Since 1 in 10 cards lists a yes under honest, 10 of the 100 cards will qualify. Of those 10, how many are attractive? Again, 1 in 10, so now you are left with 1 card. The first 1 in 10 cuts the possibilities down by 1/10, and so does the next 1 in 10, making the result 1 in 100. That’s why you multiply. Before we move on, it is worth paying attention to an important detail: the clause that reads if two possible events, A and B, are independent.
Suppose an airline has 1 seat left on a flight and 2 passengers have yet to show up. Suppose that from experience the airline knows there is a 2 in 3 chance a passenger who books a seat will arrive to claim it. Employing the multiplication rule, the gate attendant can conclude there is a 2/3 × 2/3 or about a 44 percent chance she will have to deal with an unhappy customer. The chance that neither customer will show and the plane will have to fly with an empty seat, on the other hand, is 1/3 × 1/3, or only about 11 percent. But that assumes the passengers are independent. If, say, they are traveling together, then the above analysis is wrong. The chances that both will show up are 2 in 3, the same as the chances that one will show up. It is important to remember that you get the compound probability from the simple ones by multiplying only if the events are in no way contingent on each other.
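The arithmetic in this passage is easy to reproduce. The sketch below uses only the numbers quoted above; the function names are made up for illustration, and the "traveling together" case assumes the two passengers always show up or stay home as a unit.

```python
from fractions import Fraction

P_SHOW = Fraction(2, 3)  # chance a booked passenger shows up, from the quote

def both_show_independent(p=P_SHOW):
    """Multiplication rule: valid only if the two passengers act independently."""
    return p * p

def neither_shows_independent(p=P_SHOW):
    """Both passengers fail to appear, again assuming independence."""
    return (1 - p) * (1 - p)

def both_show_traveling_together(p=P_SHOW):
    """Fully dependent case: the pair shows up or stays home as a unit."""
    return p

# The divorce-and-killed example, if the two events were independent.
divorced_and_killed = Fraction(1, 50) * Fraction(1, 5000)

print(both_show_independent(), neither_shows_independent())
print(both_show_traveling_together(), divorced_and_killed)
```

The gap between 4/9 and 2/3 shows how far off the multiplication rule can be when the independence assumption fails.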

Mlodinow then discusses the rule for calculating the probability that one event or another event will occur:

There are situations in which probabilities should be added, and that is our next law. It arises when we want to know the chances of either one event or another occurring, as opposed to the earlier situation, in which we wanted to know the chance of one event and another event both happening. The law is this: If an event can have a number of different and distinct possible outcomes, A, B, C, and so on, then the probability that either A or B will occur is equal to the sum of the individual probabilities of A and B, and the sum of the probabilities of all the possible outcomes (A, B, C, and so on) is 1 (that is, 100 percent). When you want to know the chances that two independent events, A and B, will both occur, you multiply; if you want to know the chances that either of two mutually exclusive events, A or B, will occur, you add.
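As a small illustration of the addition rule, consider the six-sided die from Cardano’s example. The sketch assumes a fair die, and `prob_either` is a hypothetical helper name.

```python
from fractions import Fraction

# A fair six-sided die: six mutually exclusive, equally likely outcomes.
die = {face: Fraction(1, 6) for face in range(1, 7)}

def prob_either(outcomes, dist):
    """Addition rule for mutually exclusive outcomes: sum their probabilities."""
    return sum((dist[o] for o in set(outcomes)), Fraction(0))

p_one_or_two = prob_either([1, 2], die)   # a bet on two of the six faces
p_any_face = prob_either(die.keys(), die)  # all outcomes together

print(p_one_or_two, p_any_face)
```

The first result matches the "2 in 6" from the earlier quote, and the second confirms that the probabilities of all possible outcomes sum to 1.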

Despite these advances in calculating probabilities, we still often fall for fallacies that stem from underappreciating the role of randomness. Taleb describes the “hot hand” fallacy:

The “hot hand in basketball” is another example of misperception of random sequences: It is very likely in a large sample of players for one of them to have an inordinately lengthy lucky streak. As a matter of fact it is very unlikely that an unspecified player somewhere does not have an inordinately lengthy lucky streak. This is a manifestation of the mechanism called regression to the mean. I can explain it as follows: Generate a long series of coin flips producing heads and tails with 50% odds each and fill up sheets of paper. If the series is long enough you may get eight heads or eight tails in a row, perhaps even ten of each. Yet you know that in spite of these wins the conditional odds of getting a head or a tail is still 50%.
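Taleb’s point about long streaks can be made concrete. The sketch below computes the exact chance that a run of coin flips contains a streak of a given length, and then the chance that at least one of many "players" has such a streak. It treats every flip as independent with a 50 percent success rate, and the player and flip counts in the usage lines are hypothetical.

```python
def prob_streak(n_flips, streak_len, p=0.5):
    """Probability that n_flips independent flips contain a run of at least
    streak_len consecutive successes, each success having probability p."""
    # state[j] = probability of currently being on a run of j successes
    # without having completed a full streak yet.
    state = [0.0] * streak_len
    state[0] = 1.0
    hit = 0.0
    for _ in range(n_flips):
        nxt = [0.0] * streak_len
        for run, mass in enumerate(state):
            nxt[0] += mass * (1 - p)        # a miss resets the run
            if run + 1 == streak_len:
                hit += mass * p             # the streak is completed
            else:
                nxt[run + 1] += mass * p    # the run grows by one
        state = nxt
    return hit

def prob_someone_streaks(n_players, n_flips, streak_len, p=0.5):
    """Chance that at least one of n_players independent players has the streak."""
    one_player = prob_streak(n_flips, streak_len, p)
    return 1 - (1 - one_player) ** n_players

# Hypothetical league: 500 players, 200 attempts each, streak of 8.
print(prob_streak(200, 8))
print(prob_someone_streaks(500, 200, 8))
```

A streak that is far from guaranteed for any single player becomes close to certain somewhere in a large enough group, with no "hot hand" in the model at all.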

Mlodinow describes the errors people make when they fail to account for “regression to the mean”:

In the mid-1960s, [Daniel] Kahneman, then a junior psychology professor at Hebrew University, agreed to perform a rather unexciting chore: lecturing to a group of Israeli air force flight instructors on the conventional wisdom of behavior modification and its application to the psychology of flight training. Kahneman drove home the point that rewarding positive behavior works but punishing mistakes does not. One of his students interrupted, voicing an opinion that would lead Kahneman to an epiphany and guide his research for decades. “I’ve often praised people warmly for beautifully executed maneuvers, and the next time they always do worse,” the flight instructor said. “And I’ve screamed at people for badly executed maneuvers, and by and large the next time they improve. Don’t tell me that reward works and punishment doesn’t work. My experience contradicts it.” The other flight instructors agreed. To Kahneman the flight instructors’ experiences rang true. On the other hand, Kahneman believed in the animal experiments that demonstrated that reward works better than punishment. He ruminated on this apparent paradox. And then it struck him: the screaming preceded the improvement, but contrary to appearances it did not cause it. How can that be? The answer lies in a phenomenon called regression toward the mean. That is, in any series of random events an extraordinary event is most likely to be followed, due purely to chance, by a more ordinary one. Here is how it works: The student pilots all had a certain personal ability to fly fighter planes. Raising their skill level involved many factors and required extensive practice, so although their skill was slowly improving through flight training, the change wouldn’t be noticeable from one maneuver to the next. Any especially good or especially poor performance was thus mostly a matter of luck.
So if a pilot made an exceptionally good landing— one far above his normal level of performance— then the odds would be good that he would perform closer to his norm— that is, worse— the next day. And if his instructor had praised him, it would appear that the praise had done no good. But if a pilot made an exceptionally bad landing— running the plane off the end of the runway and into the vat of corn chowder in the base cafeteria— then the odds would be good that the next day he would perform closer to his norm— that is, better. And if his instructor had a habit of screaming “you clumsy ape” when a student performed poorly, it would appear that his criticism did some good. In this way an apparent pattern would emerge: student performs well, praise does no good; student performs poorly, instructor compares student to lower primate at high volume, student improves. The instructors in Kahneman’s class had concluded from such experiences that their screaming was a powerful educational tool. In reality it made no difference at all.
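A small simulation shows the same pattern emerging with no instructor in the picture. This is only a sketch under stated assumptions: each landing score is a fixed skill plus fresh luck, both drawn from normal distributions, with luck dominating from one landing to the next. All parameter values and function names are hypothetical.

```python
import random
from statistics import mean

def simulate_two_landings(n_pilots=10_000, skill_sd=1.0, luck_sd=3.0, seed=0):
    """Each landing score = fixed skill + fresh luck. No praise or criticism
    appears anywhere in the model, so feedback cannot affect the result."""
    rng = random.Random(seed)
    pilots = []
    for _ in range(n_pilots):
        skill = rng.gauss(0, skill_sd)
        day1 = skill + rng.gauss(0, luck_sd)
        day2 = skill + rng.gauss(0, luck_sd)
        pilots.append((day1, day2))
    return pilots

def before_after(pilots, fraction=0.1):
    """Average day-1 and day-2 scores for the best and worst day-1 performers."""
    ranked = sorted(pilots, key=lambda pair: pair[0])
    k = max(1, int(len(ranked) * fraction))

    def summarize(group):
        return (mean(d1 for d1, _ in group), mean(d2 for _, d2 in group))

    return {"best": summarize(ranked[-k:]), "worst": summarize(ranked[:k])}

result = before_after(simulate_two_landings())
print(result)
```

The pilots with the best first landings score lower on average the second time, and those with the worst first landings score higher, which is exactly the pattern the instructors attributed to praise and screaming.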

Mlodinow describes the “gambler’s fallacy,” the mistaken belief that the odds of an event with a fixed probability shift to compensate for recent outcomes (something regression to the mean does not imply):

Another mistaken notion connected with the law of large numbers is the idea that an event is more or less likely to occur because it has or has not happened recently. The idea that the odds of an event with a fixed probability increase or decrease depending on recent occurrences of the event is called the gambler’s fallacy. For example, if [someone] landed, say, 44 heads in the first 100 tosses, the coin would not develop a bias toward tails in order to catch up! That’s what is at the root of such ideas as “her luck has run out” and “He is due.” That does not happen. For what it’s worth, a good streak doesn’t jinx you, and a bad one, unfortunately, does not mean better luck is in store. Still, the gambler’s fallacy affects more people than you might think, if not on a conscious level then on an unconscious one. People expect good luck to follow bad luck, or they worry that bad will follow good.
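The claim that the coin does not "catch up" can be checked by brute force. The sketch below assumes a fair coin, lists every equally likely sequence that opens with a streak of heads, and counts how often the next toss is also heads. The function name is hypothetical.

```python
from fractions import Fraction
from itertools import product

def prob_heads_after_streak(streak_len):
    """Among all equally likely fair-coin sequences that open with streak_len
    heads, return the fraction whose next toss is also heads."""
    sequences = product("HT", repeat=streak_len + 1)
    after_streak = [seq for seq in sequences
                    if all(toss == "H" for toss in seq[:streak_len])]
    heads_next = sum(1 for seq in after_streak if seq[-1] == "H")
    return Fraction(heads_next, len(after_streak))

for n in (1, 5, 10):
    print(n, prob_heads_after_streak(n))
```

However long the opening streak, exactly half of the sequences continue with heads and half with tails.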

Then there is the “prosecutor’s fallacy”:

The renowned attorney and Harvard Law School professor Alan Dershowitz also successfully employed the prosecutor’s fallacy -- to help defend O. J. Simpson in his trial for the murder of Simpson’s ex-wife, Nicole Brown Simpson, and a male companion. The trial of Simpson, a former football star, was one of the biggest media events of 1994–95. The police had plenty of evidence against him. They found a bloody glove at his estate that seemed to match one found at the murder scene. Bloodstains matching Nicole’s blood were found on the gloves, in his white Ford Bronco, on a pair of socks in his bedroom, and in his driveway and house. Moreover, DNA samples taken from blood at the crime scene matched O. J.’s. The defense could do little more than accuse the Los Angeles Police Department of racism -- O.J. is African American -- and criticize the integrity of the police and the authenticity of their evidence. The prosecution made a decision to focus the opening of its case on O. J.’s propensity toward violence against Nicole. Prosecutors spent the first ten days of the trial entering evidence of his history of abusing her and claimed that this alone was a good reason to suspect him of her murder. As they put it, “a slap is a prelude to homicide.” The defense attorneys used this strategy as a launchpad for their accusations of duplicity, arguing that the prosecution had spent two weeks trying to mislead the jury and that the evidence that O.J. had battered Nicole on previous occasions meant nothing. Here is Dershowitz’s reasoning: 4 million women are battered annually by husbands and boyfriends in the United States, yet in 1992, according to the FBI Uniform Crime Reports, a total of 1,432, or 1 in 2,500, were killed by their husbands or boyfriends. Therefore, the defense retorted, few men who slap or beat their domestic partners go on to murder them. True? Yes. Convincing? Yes. Relevant? No.
The relevant number is not the probability that a man who batters his wife will go on to kill her (1 in 2,500) but rather the probability that a battered wife who was murdered was murdered by her abuser. According to the Uniform Crime Reports for the United States and Its Possessions in 1993, the probability Dershowitz (or the prosecution) should have reported was this one: of all the battered women murdered in the United States in 1993, some 90 percent were killed by their abuser. That statistic was not mentioned at the trial … Dershowitz may have felt justified in misleading the jury because, in his words, “the courtroom oath—‘to tell the truth, the whole truth and nothing but the truth’— is applicable only to witnesses. Defense attorneys, prosecutors, and judges don’t take this oath … indeed, it is fair to say the American justice system is built on a foundation of not telling the whole truth.”
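The difference between the two probabilities is a matter of which group you condition on. In the sketch below, the 1-in-2,500 rate comes from the quote, but the rate at which a battered woman is killed by someone other than her abuser is purely an assumption of mine, picked so the result lines up with the "some 90 percent" figure. It is not taken from the FBI reports.

```python
from fractions import Fraction

# From the quote: roughly 1 in 2,500 battered women is killed by her abuser in a year.
P_KILLED_BY_ABUSER = Fraction(1, 2500)

# ASSUMPTION (not from the source or the FBI reports): the yearly chance that a
# battered woman is killed by someone else. It is chosen here only so that the
# result lines up with the "some 90 percent" figure in the quote.
P_KILLED_BY_OTHER = Fraction(1, 22500)

def p_abuser_given_murdered(p_by_abuser, p_by_other):
    """P(killer is the abuser | battered woman was murdered), treating the two
    causes of death as mutually exclusive."""
    return p_by_abuser / (p_by_abuser + p_by_other)

posterior = p_abuser_given_murdered(P_KILLED_BY_ABUSER, P_KILLED_BY_OTHER)
print(float(P_KILLED_BY_ABUSER), float(posterior))
```

The first number answers "will a batterer go on to kill?" and is tiny. The second answers "given that she was murdered, was it the batterer?" and is large, because being murdered by anyone else is rarer still.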

Taleb describes the “small world” fallacy this way:

[M]isconception of probabilities arises from the random encounters one may have with relatives or friends in highly unexpected places. “It’s a small world!” is often uttered with surprise. But these are not improbable occurrences— the world is much larger than we think. It is just that we are not truly testing for the odds of having an encounter with one specific person, in a specific location at a specific time. Rather, we are simply testing for any encounter, with any person we have ever met in the past, and in any place we will visit during the period concerned. The probability of the latter is considerably higher, perhaps several thousand times the magnitude of the former.
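Taleb’s "several thousand times" can be illustrated with a toy calculation. Both input numbers below are hypothetical, chosen only to show the scale of the effect, and the opportunities for an encounter are assumed to be independent.

```python
def prob_any_encounter(p_single, n_opportunities):
    """Chance of at least one encounter across n independent opportunities,
    each with probability p_single."""
    return 1 - (1 - p_single) ** n_opportunities

# Both numbers are hypothetical, chosen only to show the scale of the effect.
P_SPECIFIC = 1e-6         # one named person, one place, one moment
N_OPPORTUNITIES = 5_000   # acquaintances x places x occasions over the period

p_any = prob_any_encounter(P_SPECIFIC, N_OPPORTUNITIES)
ratio = p_any / P_SPECIFIC
print(p_any, ratio)
```

When each individual encounter is very unlikely, the chance of some encounter grows almost in proportion to the number of opportunities, so thousands of opportunities multiply the odds by thousands.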

Taleb describes how, in the past, and perhaps even today, people can be conned by those who exploit our ignorance of randomness:

You get an anonymous letter on January 2 informing you that the market will go up during the month. It proves to be true, but you disregard it owing to the well-known January effect (stocks have gone up historically during January). Then you receive another one on February 1 telling you that the market will go down. Again, it proves to be true. Then you get another letter on March 1— same story. By July you are intrigued by the prescience of the anonymous person and you are asked to invest in a special offshore fund. You pour all your savings into it. Two months later, your money is gone. You go spill your tears on your neighbor’s shoulder and he tells you that he remembers that he received two such mysterious letters. But the mailings stopped at the second letter. He recalls that the first one was correct in its prediction, the other incorrect. What happened? The trick is as follows. The con operator pulls 10,000 names out of a phone book. He mails a bullish letter to one half of the sample, and a bearish one to the other half. The following month he selects the names of the persons to whom he mailed the letter whose prediction turned out to be right, that is, 5,000 names. The next month he does the same with the remaining 2,500 names, until the list narrows down to 500 people. Of these there will be 200 victims. An investment in a few thousand dollars’ worth of postage stamps will turn into several million.
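The mechanism of the con is repeated halving. The sketch below assumes the operator always splits the remaining list evenly between a bullish and a bearish letter and drops whoever received the wrong call. It shows the mechanism only and does not try to reproduce the final figures in the quote.

```python
def surviving_recipients(initial_names, n_letters):
    """Recipients who have seen only correct predictions after each mailing,
    assuming the operator splits the remaining list evenly between 'up' and 'down'."""
    counts = [initial_names]
    for _ in range(n_letters):
        counts.append(counts[-1] // 2)  # half the list received the wrong call
    return counts

# Starting list of 10,000 names from the quote, followed for six monthly letters.
print(surviving_recipients(10_000, 6))
```

To each survivor the run of correct calls looks like prescience, yet for any single recipient it had only a 1 in 2 chance per letter of continuing.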

As Taleb writes, many conspiracy theories also rely on people’s failures to appreciate randomness:

I can create a conspiracy theory by downloading hundreds of paintings from an artist or group of artists and finding a constant among all those paintings (among the hundreds of thousands of traits). I would then concoct a conspiratorial theory around a secret message shared by these paintings.

Lots of conspiracy theories are bandied about by alleged “experts” on television. Taleb amusingly writes:

[T]he accomplishment from which I derive the most pride is my weaning myself from television and the news media. I am currently so weaned that it actually costs me more energy to watch television than to perform any other activity, like, say, writing this book. But this did not come without tricks. Without tricks I would not escape the toxicity of the information age. In the trading room of my company, I have the television set turned on all day with the financial news channel CNBC staging commentator after commentator and CEO after CEO murdering rigor all day long. What is the trick? I have the volume turned completely off. Why? Because when the television set is silent, the babbling person looks ridiculous, exactly the opposite effect as when the sound is on. One sees a person with moving lips and contortions in his facial muscles, taking themselves seriously—but no sound comes out. We are visually but not auditorily intimidated, which causes a dissonance. The speaker’s face expresses some excitement, but since no sound comes out, the exact opposite is conveyed. This is the sort of contrast the philosopher Henri Bergson had in mind in his Treatise on Laughter, with his famous description of the gap between the seriousness of a gentleman about to walk on a banana skin and the comical aspect of the situation. Television pundits lose their intimidating effect -- they even look ridiculous. They seem to be excited about something terribly unimportant. Suddenly pundits become clowns.

In the next essay in this series, we’ll explore the randomness of aesthetics.