The most sophisticated computers work on a simple system of on-and-off switches, with each switch, or combination of switches, encoding the information that computers process and turn into words, calculations, and images. These switches transmit electrical impulses indicating whether each one is on or off, and the more impulses a computer can transmit, the greater its processing power. In microchips, these impulses travel between tiny metal nodes embedded in the chip, so the more of these nodes you can fit on a chip, the more impulses can be sent at the same time, increasing processing power.
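To make the switch idea concrete, here is a small Python sketch (added for illustration; the function names are hypothetical). A row of n on/off switches can take 2ⁿ distinct combinations, and a letter can be stored as one such combination, shown here using the standard ASCII code for the character.

```python
def combinations(n_switches: int) -> int:
    """Number of distinct on/off patterns that n switches can form."""
    return 2 ** n_switches


def as_switches(char: str) -> str:
    """Show an ASCII character as 8 on/off states (1 = on, 0 = off)."""
    return format(ord(char), "08b")


print(combinations(8))   # 256
print(as_switches("A"))  # 01000001
```

Eight switches are enough for 256 different patterns, which is why a single byte can hold any one character of the basic ASCII alphabet.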

As Simon Winchester writes in his book The Perfectionists: How Precision Engineers Created the Modern World:

And so the near-exponential process of chips becoming ever tinier and ever more precise got under way … [T]he smaller the transistor, the less electricity needed to make it work, and the faster it could operate—and so, on that level, its operations were cheaper, too … Place a thousand transistors onto a single line of silicon, and then square it, and without significant additional cost, you produce a chip with a million transistors. It is a business plan without any obvious disadvantage.

Gordon Moore, who ran research and development at Fairchild Semiconductor in the 1960s, predicted that the number of transistors that could fit on a chip, and with it computer processing power, would double every couple of years, and so it has. As Winchester continues:

The numbers are beyond incredible. There are now more transistors at work on this planet (some 15 quintillion, or 15,000,000,000,000,000,000) than there are leaves on all the trees in the world. In 2015, the four major chip-making firms were making 14 trillion transistors every single second. Also, the sizes of the individual transistors are well down into the atomic level. Node size has shrunk almost exactly as Gordon Moore predicted it would. In 1971, the transistors on the Intel 4004 were ten microns apart—a space only about the size of a droplet of fog separated each one of the 2,300 transistors on the board. By 1985, the nodes on an Intel 80386 chip had come down to one micron, the diameter of a typical bacterium. By 1985, processors typically had more than a million transistors.
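As a rough illustration of the doubling Moore described, the Python sketch below (function name hypothetical) projects transistor counts forward from the 2,300 transistors of the 1971 Intel 4004 mentioned in the quote. It assumes an idealized, perfectly regular doubling every two years; real chips have tracked the trend only approximately, so the output shows the shape of the curve, not historical counts.

```python
def projected_transistors(start_count: int, start_year: int, year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Transistor count predicted by a clean doubling every `doubling_period_years`."""
    doublings = (year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


# Start from the Intel 4004's 2,300 transistors in 1971.
for year in (1971, 1981, 1991, 2001):
    print(year, round(projected_transistors(2300, 1971, year)))
```

Every decade adds five doublings, a factor of 32, which is why the projected counts climb from thousands to tens of millions within thirty years.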

This processing power, combined with increasing precision in measuring time, has given us the GPS we use every day, and its development is another story worth telling.

Once satellites were orbiting Earth, researchers realized that if an observer on the ground could precisely establish a satellite's position in space, the process could also be run in reverse: satellites at known positions could be used to locate an object below (much as earlier sailors used the North Star to determine their position at sea).

An early demonstration of the idea used simple signal transmissions between a moving car and a fixed office, and it relied on a principle established by Einstein: the speed of light (the speed at which all electromagnetic waves travel) is the same for every observer, however they are moving relative to one another. As Winchester explains:

in 1973, a Vermont country doctor’s son, Roger Easton, came up with something … It involved the question of time, and of the clocks that record its passage. Indeed, the physical principle involved is known as passive ranging, and in its essence, it is disconcertingly simple. As the distance between the car and the office increased, so did the discrepancy between the two numbers, and it did so solely because of the distance, as all else (the frequencies of the two devices and the speed of signal transmission, the speed of light) was constant. The navy officers watched, fascinated. As the calculations came in, more or less instantly, they could tell exactly how far away [the] car was, how fast he was going, and when he changed direction. They noted with particular admiration and frank astonishment as the number changed noticeably at the one point when Maloof, now driving scores of miles away, changed lanes. The demonstration was a consummate success: in principle, clock-difference navigation systems were shown to work, and far more easily than anyone had imagined.

Judging distance precisely by this method requires extremely precise clocks, which brings us back to the topic we explored in the first two essays of this series. And clocks have become very, very precise. That is because, as James Clerk Maxwell recognized, some processes at the atomic and subatomic level occur at frequencies that never change at all, or at least not to any degree we can measure even today. As Winchester writes:

It had been known since the nineteenth century that when an electron performs this leap back to its ground state from a higher energy level, it emits a highly stable burp of electromagnetic radiation. The radiation from such an atomic transition, it was said by many physicists, was so exact and so stable that it might well one day be used as the basis of a clock. The first true atomic clock was invented by a Briton, Louis Essen, in 1955, when he and a colleague, Jack Parry, made a model and used as its heartbeat the transition of electrons orbiting the nucleus of atoms of the metal cesium … [The atomic clock] now has a use and value beyond all measure, since in transition it emits radiation at such a steady and unvarying beat that the scientists at Sèvres readily agreed, in 1967, and after much badgering by Louis Essen and Britain’s National Physical Laboratory, where he worked, that it be used as the basis for a new definition of the second. As it remains today. The definition of the second today is quite simply, if simply be the word, the duration of 9,192,631,770 cycles at the microwave frequency of the spectral line corresponding to the transition between two hyperfine energy levels of the ground state of cesium 133 … [Today] the second could be measured to a known degree of precision of 2.3 × 10⁻¹⁶, or 0.000 000 000 000 000 23. This means it would neither lose nor gain a second in 138 million years. Now there is talk of quantum logic clocks and optical clocks that deliver even more remarkable figures, one with a claimed accuracy of 8.6 × 10⁻¹⁸, meaning that time would be kept impeccably for a billion years.
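The quoted figures can be checked with simple arithmetic. The Python sketch below (function and constant names are hypothetical) assumes the clock's fractional error stays constant and uses a 365.25-day year: a clock accurate to 2.3 × 10⁻¹⁶ accumulates a full second of error only after 1/(2.3 × 10⁻¹⁶) seconds have elapsed.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, about 3.156e7 seconds


def years_to_drift_one_second(fractional_uncertainty: float) -> float:
    """Years before a clock with this constant fractional error is off by 1 s."""
    seconds_needed = 1.0 / fractional_uncertainty
    return seconds_needed / SECONDS_PER_YEAR


print(f"{years_to_drift_one_second(2.3e-16):.3g}")  # about 1.38e+08 years
print(f"{years_to_drift_one_second(8.6e-18):.3g}")  # about 3.68e+09 years
```

The first result agrees with the quoted 138 million years; the second works out to a few billion years, consistent with the claim that such a clock would keep time for a billion years.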

Timekeeping is now so precise that the official unit of length is defined in terms of time: the meter is the length of the path traveled by light in a vacuum during a time interval of 1/299,792,458 of a second. That brings us back to GPS.
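Because the meter is defined this way, the speed of light is exactly 299,792,458 meters per second, and any error in timing a signal becomes an error in distance. The short Python sketch below (names hypothetical) ignores real-world complications such as atmospheric delay and simply multiplies a timing error by the speed of light, which shows why satellite positioning demands atomic clocks.

```python
# Exact by definition of the meter.
C_M_PER_S = 299_792_458


def range_error_m(clock_error_s: float) -> float:
    """Distance error caused by a timing error when range = c * travel time."""
    return C_M_PER_S * clock_error_s


for label, dt in [("1 microsecond", 1e-6), ("1 nanosecond", 1e-9)]:
    print(f"{label}: {range_error_m(dt):.3f} m")
```

A clock off by one microsecond misplaces a range by about 300 meters; even one nanosecond costs about 30 centimeters.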

With satellites in space and atomic clocks providing precise timing, a method called trilateration could be used to pinpoint the location of things on Earth. As the Federal Aviation Administration explains:

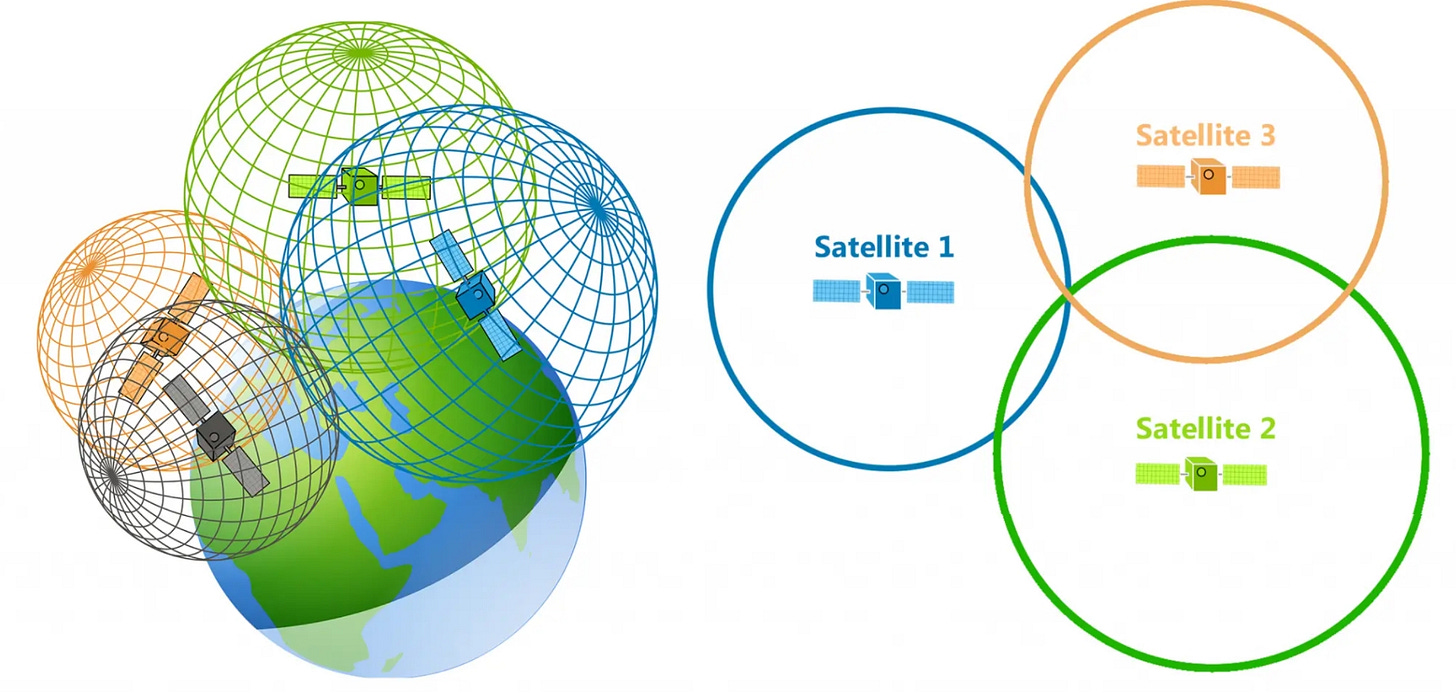

GPS satellites carry atomic clocks that provide extremely accurate time. The time information is placed in the codes broadcast by the satellite so that a receiver can continuously determine the time the signal was broadcast. The signal contains data that a receiver uses to compute the locations of the satellites and to make other adjustments needed for accurate positioning. The receiver uses the time difference between the time of signal reception and the broadcast time to compute the distance, or range, from the receiver to the satellite. The receiver must account for propagation delays or decreases in the signal's speed caused by the ionosphere and the troposphere. With information about the ranges to three satellites and the location of the satellite when the signal was sent, the receiver can compute its own three-dimensional position. An atomic clock synchronized to GPS is required in order to compute ranges from these three signals. However, by taking a measurement from a fourth satellite, the receiver avoids the need for an atomic clock. Thus, the receiver uses four satellites to compute latitude, longitude, altitude, and time.
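The FAA's description amounts to solving four equations for four unknowns: the receiver's three coordinates plus its clock error. The Python sketch below shows one common way to do that, an iterative least-squares (Gauss-Newton) fit, offered as an illustration rather than as how any particular receiver works. It assumes an Earth-centered coordinate frame in meters, ignores the ionospheric and tropospheric delays the FAA mentions, and uses made-up satellite positions; all function names are hypothetical.

```python
import math

Vec = list[float]


def solve_linear(a: list[Vec], b: Vec) -> Vec:
    """Solve the small linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def solve_position(sats: list[Vec], pseudoranges: Vec, iterations: int = 10) -> Vec:
    """Estimate [x, y, z, b] from four or more pseudoranges.

    Each pseudorange is modeled as true range + b, where b is the receiver's
    clock error multiplied by the speed of light (i.e. expressed in meters).
    """
    est = [0.0, 0.0, 0.0, 0.0]  # initial guess: Earth's center, zero clock error
    for _ in range(iterations):
        jac, res = [], []
        for s, rho in zip(sats, pseudoranges):
            d = math.dist(s, est[:3])
            # Partial derivatives of (range + b) with respect to x, y, z, b.
            jac.append([(est[i] - s[i]) / d for i in range(3)] + [1.0])
            res.append(rho - (d + est[3]))
        # Gauss-Newton step from the normal equations (J^T J) delta = J^T res.
        jtj = [[sum(row[i] * row[j] for row in jac) for j in range(4)] for i in range(4)]
        jtr = [sum(row[i] * r for row, r in zip(jac, res)) for i in range(4)]
        delta = solve_linear(jtj, jtr)
        est = [e + step for e, step in zip(est, delta)]
    return est


# Made-up example: satellite positions in meters (Earth-centered frame) and a
# receiver on the equator whose clock runs 10 microseconds fast.
C = 299_792_458.0
sats = [[20_000e3, 10_000e3, 14_000e3],
        [22_000e3, -12_000e3, 8_000e3],
        [18_000e3, 5_000e3, -18_000e3],
        [25_000e3, -2_000e3, -6_000e3]]
truth = [6_371e3, 0.0, 0.0]
bias_m = C * 10e-6  # clock error expressed as a distance
rhos = [math.dist(s, truth) + bias_m for s in sats]
x, y, z, b = solve_position(sats, rhos)
print(f"position error: {math.dist([x, y, z], truth):.6f} m")
print(f"recovered clock error: {b / C * 1e6:.3f} microseconds")
```

Treating the clock error as a fourth unknown is what lets an inexpensive receiver, with no atomic clock of its own, recover both its position and the correct time from the fourth satellite's signal.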

Essentially, each satellite's signal, spreading out in all directions, defines a sphere around that satellite whose radius is the computed range (in two-dimensional diagrams these appear as rings). The receiver (your cell phone, for example) must lie on every one of these spheres at once, so the point where they all intersect is where you are.
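In two dimensions the same idea can be worked by hand: three circles, each centered on a known transmitter, meet at a single point. The Python sketch below is an illustration, not how a GPS receiver actually computes its fix; the function name and sample coordinates are made up. It subtracts the first circle's equation from the other two, which cancels the squared terms and leaves two linear equations, and it assumes exact distances with no clock error.

```python
import math


def trilaterate_2d(centers, radii):
    """Intersect three circles by subtracting the first circle's equation from
    the others, which cancels x**2 + y**2 and leaves two linear equations."""
    (x1, y1), (x2, y2), (x3, y3) = centers
    r1, r2, r3 = radii
    # Row i: 2(xi - x1) x + 2(yi - y1) y = r1^2 - ri^2 + xi^2 - x1^2 + yi^2 - y1^2
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("circle centers are collinear; position is ambiguous")
    # Cramer's rule for the 2x2 system.
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - b1 * a21) / det)


centers = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
true_point = (3.0, 4.0)
radii = [math.dist(c, true_point) for c in centers]
print(trilaterate_2d(centers, radii))  # approximately (3.0, 4.0)
```

The check for collinear centers reflects a real geometric limit: if the transmitters sit in a line, the circles meet at two mirror-image points and the position cannot be pinned down.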


So through math, clocks, and guns came just about every consumer good and other product we enjoy today, and all of it relied on increasing precision.

Links to all essays in this series: Part 1; Part 2; Part 3; Part 4