It’s important to recognize that attempts at measurement can narrow focus instead of broadening it. As James Vincent writes in his book Beyond Measure: The Hidden History of Measurement from Cubits to Quantum Constants:

To measure is to choose; to focus your attention on a single attribute and exclude all others. The word “precision” itself comes from the Latin praecisio, meaning “to cut off,” and so, by examining how and where measurement is applied, we can investigate our own impulses and desires.

Measurement, then, can be a way of focusing attention on one set of things (those that are measured), and thereby obscuring others (those that aren’t). And politicians, whose electoral supporters will necessarily adhere to a partisan policy platform, will tend to promote measurements that focus on their preferred political outcomes. As Vincent writes:

As the historian Emanuele Lugli has noted, units of measurement are, for the powerful, “sly tools of subjugation.” Each time they’re deployed, they turn the world “into a place that continues to make sense as long as the power that legitimises the measurements rests in place.” In other words: measurement does not only benefit from authority – it creates it too.

Part of what makes two-party politics so divisive, and ineffective in many ways, is its tendency to install in office people who will choose to selectively measure things along party lines. As Erica Thompson writes in her book Escape from Model Land: How Mathematical Models Can Lead Us Astray and What We Can Do About It:

Stylised facts are perhaps most like cartoons or caricatures, where some recognisable feature is overemphasised beyond the lifelike in order to capture some unique aspect that differentiates one particular individual from most others. Political cartoonists pick out prominent features such as ears (Barack Obama) or hair (Donald Trump) to construct a grotesque but immediately recognisable caricature of the subject … We might think of models as being caricatures in the same sense. Inevitably, they emphasise the importance of certain kinds of feature -- perhaps those that are the most superficially recognisable -- and ignore others entirely … Thinking of models as caricatures helps us to understand how they both generate and help to illustrate, communicate and share insights. Thinking of models as stereotypes hints at the more negative aspects of this dynamic: in constructing this stereotype, what implicit value judgements are being made?

Models that intentionally fail to measure important information on partisan grounds can act like gilded cages from which their politically tribal adherents can’t escape. As Thompson writes:

Though Model Land is easy to enter, it is not so easy to leave. Having constructed a beautiful, internally consistent model and a set of analysis methods that describe the model in detail, it can be emotionally difficult to acknowledge that the initial assumptions on which the whole thing is built are not literally true.

This carries high risks. As Thompson writes:

In the gap between Model Land [her term for whatever model one’s looking at] and the real world lie unquantifiable uncertainties: is this model structurally correct? Have I taken account of all relevant variables? Am I influencing the system by measuring and predicting it?

Thompson writes about two economists, Walter Newlyn and Bill Phillips, who in 1949 created a physical model of the economy with tubes that contained flowing water, which represented the movement of money through the economic system:

The Newlyn-Phillips machine, also called the MONIAC (Monetary National Income Analogue Calculator), conceptualises and physically represents money as liquid, created by credit (or export sales) and circulated around the economy at rates depending on key model parameters like the household savings rate and levels of taxation. The key idea here is that there are “stocks” of money in certain nominal locations (banks, government, etc), modelled as tanks that can be filled or emptied, and “flows” of money into and out of those tanks at varying rates. The rate of flow along pipes is controlled by valves which in turn are controlled by the level of water in the tanks. Pumps labelled “taxation” return water into the tank representing the national treasury. The aim is to set the pumps and valves in a way that allows for a steady-state solution, i.e., a closed loop that prevents all of the water ending up in one place and other tanks running dry. In this way a complex system of equations is represented and solved in physical form … [T]his machine does not seem to have been much used directly for predictive purposes, but performed a more pedagogical role in illustrating and making visible the relationship between different aspects of the economy.
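The stock-and-flow idea in that description can be sketched in a few lines of Python. This is only a toy illustration, not a reconstruction of the actual machine: the three tanks, the four rate parameters, and the rule that each valve opens in proportion to the level of the tank it drains are all simplifying assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Tanks:
    """Water levels ("stocks" of money) in three hypothetical tanks."""
    households: float
    banks: float
    treasury: float

    def total(self) -> float:
        return self.households + self.banks + self.treasury


def step(t: Tanks, savings_rate: float, tax_rate: float,
         lending_rate: float, spending_rate: float, dt: float = 0.1) -> Tanks:
    """Advance the toy system by one small time step (forward Euler)."""
    # Outflows from the household tank: each valve opens in proportion
    # to the household level (an assumption of this sketch).
    taxes = tax_rate * t.households * dt
    savings = savings_rate * t.households * dt
    # Return flows: banks lend and the treasury spends in proportion
    # to their own levels, sending water back to households.
    lending = lending_rate * t.banks * dt
    spending = spending_rate * t.treasury * dt
    return Tanks(
        households=t.households - taxes - savings + lending + spending,
        banks=t.banks + savings - lending,
        treasury=t.treasury + taxes - spending,
    )


def run(t: Tanks, steps: int, **rates: float) -> Tanks:
    """Repeat `step` until the levels have had time to settle."""
    for _ in range(steps):
        t = step(t, **rates)
    return t


if __name__ == "__main__":
    start = Tanks(households=100.0, banks=0.0, treasury=0.0)
    final = run(start, steps=2000, savings_rate=0.1, tax_rate=0.2,
                lending_rate=0.2, spending_rate=0.4)
    print(final)
```

With these made-up rates the loop is closed, so the total amount of water never changes, and the levels settle where inflows equal outflows for every tank: roughly half the water in the household tank and a quarter each in the banks and the treasury. The sketch also shows the limitation Thompson describes: the only questions it can answer are questions about the parameters it was built with.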

The machine also showed the limits of all models:

The Phillips-Newlyn machine provided a new and valuable perspective, but it also inherited biases, assumptions and blind spots from the pre-existing equation set it was designed to represent. Only certain kinds of questions can be asked of this model, and only certain types of answers can be given. You can vary the parameter called “household savings rate” and observe the “healthcare expenditure,” but you cannot vary a parameter called “tax evasion” or observe “trust in government.” … Just as you cannot ask the Newlyn-Phillips machine about tax evasion, you also cannot ask a physical climate model about the most appropriate price of carbon, nor can you ask an epidemiological model whether young people would benefit more from reduced transmission of a virus or from access to in-person education.

And just like models, “experts” in various fields are limited by their own expertise. Dr. Anthony Fauci, for example, during a Senate hearing on the COVID-19 pandemic, when asked about the larger costs of the restrictions he was recommending, said “I’m a scientist, a physician, and a public health official. I give advice, according to the best scientific evidence. There are a number of other people who come into that and give advice that are more related to the things that you spoke about, the need to get the country back open again, and economically. I don’t give advice about economic things. I don’t give advice about anything other than public health.”

In 2023, former National Institutes of Health chief Francis Collins, who served during the COVID pandemic, publicly admitted this error, at least in part, saying: “If you’re a public-health person and you’re trying to make a decision, you have this very narrow view of what the right decision is, and that is something that will save a life. So you attach infinite value to stopping the disease and saving a life. You attach a zero value to whether this actually totally disrupts people’s lives, ruins the economy, and has many kids kept out of school in a way that they never quite recovered.” This, he explained, “is a public-health mindset,” which was “another mistake we made.”

Dr. Fauci’s testimony in that instance shows why models should have faces on them. What I mean is that, too often, policymakers point to mathematical “models” in the abstract to justify their preferred public policies, without addressing the inherent limitations of the models themselves. But for every model, the “experts” on that model (those who created it) should be named, so that questions about the model can be addressed to them. As Thompson writes:

Distinguishing between Model Land and real world would reduce the sensationalism of some headlines and would also encourage scientific results to clarify more clearly where or whether they are expected to apply to the real world as well. Say we want to modify a headline like “Planet-Killer Asteroid Will Hit Earth Next Year” to acknowledge the scientific uncertainty. Instead of changing it to “Models Predict That Planet-Killer Asteroid Will Hit Earth Next Year,” which leaves the reader no wiser about whether it will actually happen or not, we could change it to “Astronomers Predict That Planet-Killer Asteroid Will Hit Earth Next Year.” If they turn out to be wrong, at least there is a named person rather than a faceless model there to explain the mistake. In order to have this kind of accountability, we need not only the model and its forecast, but also someone to take responsibility for the step out of Model Land into the real world … Then the question of adequacy-for-purpose does not solely relate to the model, but also to the expert. Are they also adequate for purpose? How do we know?

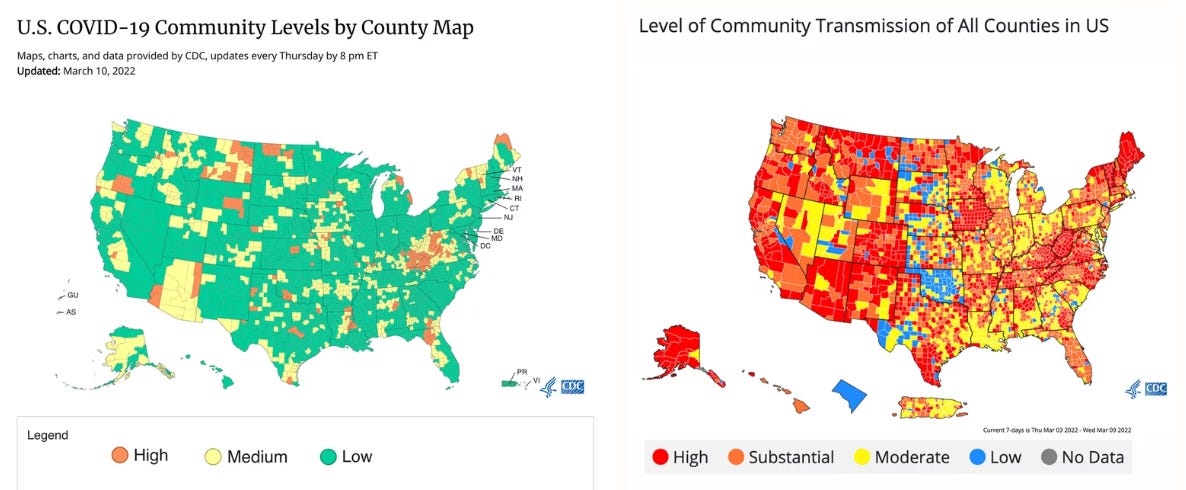

For most of the COVID-19 pandemic, the Centers for Disease Control and Prevention based its recommended restrictions on a flawed and overly broad metric, namely the rate of community spread of the virus. But that metric failed to focus on what’s much more important to most people: the rate of hospitalizations (and deaths) due to COVID. After all, if a virus spreads a lot but results in relatively few hospitalizations, it should be less of a concern. And indeed, the CDC ultimately came around to focusing on rates of hospitalization rather than community spread, not long after a prominent gubernatorial election in Virginia produced an upset victory for the Republican candidate, who supported easing COVID-related restrictions. As National Public Radio reported in March 2022:

Cindy Watson would like some clarity from the Centers for Disease Control and Prevention. Madison County, Iowa, where she lives, is categorized as having "low" COVID levels on the agency's new lookup tool for COVID-19 Community Levels – it's even colored an inviting green on the map. But when she looked at the agency's existing map of COVID transmission levels, the same county – and much of the country – was bright red and classified as "high." In fact, Watson was among the millions of Americans who were instantly transported to a green zone in late February, when the agency unveiled its new metrics and map. That color change wasn't simply symbolic. It came with new guidance that said Watson — and anyone else living somewhere green or yellow — could stop wearing masks in public … Up until late February, the CDC based its rankings of a county's level of risk on the amount of virus spreading there and what portion of lab tests were found to be positive. The new framework instead focuses on the situation in hospitals — how many people are being admitted for COVID-19 and how much capacity is left.
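How the same county can be “high” on one map and “low” on the other is easy to see in a small sketch. Everything in it is hypothetical: the county figures are invented, the cut-off values are illustrative placeholders rather than the CDC’s published thresholds, and the two-level scale is a simplification of the multi-level maps the agency actually used.

```python
from dataclasses import dataclass


@dataclass
class CountyWeek:
    """One week of (invented) figures for a hypothetical county."""
    cases_per_100k: float       # new cases per 100,000 residents
    test_positivity: float      # fraction of lab tests that were positive
    admissions_per_100k: float  # new COVID hospital admissions per 100,000
    beds_occupied: float        # fraction of inpatient beds used by COVID patients


# Illustrative cut-offs ONLY: assumptions for this sketch,
# not the CDC's actual thresholds.
CASES_CUTOFF = 100.0
POSITIVITY_CUTOFF = 0.10
ADMISSIONS_CUTOFF = 20.0
BEDS_CUTOFF = 0.15


def transmission_level(c: CountyWeek) -> str:
    """Older-style metric: looks only at how much virus is spreading."""
    spreading = (c.cases_per_100k >= CASES_CUTOFF
                 or c.test_positivity >= POSITIVITY_CUTOFF)
    return "high" if spreading else "low"


def community_level(c: CountyWeek) -> str:
    """Newer-style metric: looks only at the strain on hospitals."""
    strained = (c.admissions_per_100k >= ADMISSIONS_CUTOFF
                or c.beds_occupied >= BEDS_CUTOFF)
    return "high" if strained else "low"


if __name__ == "__main__":
    county = CountyWeek(cases_per_100k=150.0, test_positivity=0.08,
                        admissions_per_100k=5.0, beds_occupied=0.03)
    print("transmission:", transmission_level(county))
    print("community:", community_level(county))
```

Nothing about the county changes between the two function calls; only the choice of what to measure does.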

There’s also reason to think COVID-related death counts are significantly overstated because of incentives that influence counting methods. As relayed in the Wall Street Journal:

Public-health experts are increasingly acknowledging what has long been obvious: America is overcounting hospitalizations and deaths from Covid-19. Hospital patients are routinely tested for Covid on admission, then counted as “Covid hospitalizations” even if they’re asymptomatic. When patients die, Leana Wen notes in a Washington Post column, Covid is often listed on their death certificates even if it played no part in killing them. Government programs create incentives to overestimate Covid’s toll, and poor data make it difficult to pinpoint who’s still at risk and how effective boosters are. To the Centers for Disease Control and Prevention, a positive Covid test is enough to identify a “Covid hospitalization.” … The CDC Data Tracker also uses state-generated data in reporting hundreds of daily Covid deaths. Many states report a “Covid death” anytime the decedent had a positive PCR test in the month or two before dying. The National Center for Health Statistics, a CDC subdivision, uses death certificates, which are more reliable. But death certificates have problems of their own, in part because government policies create incentives to overcount. Under the federal public-health emergency, which begins its fourth year on Friday, hospitals get a 20% bonus for treating Medicare patients diagnosed with Covid-19. That made sense at the beginning of the pandemic, when hospitals were swamped with seriously ill patients, subjected to arduous protocols and losing money from canceled elective procedures. It’s irrational under current circumstances … Another incentive to overcount comes from the American Rescue Plan of 2021, which authorizes the Federal Emergency Management Agency to pay Covid-19 death benefits for funeral services, cremation, caskets, travel and a host of other expenses. The benefit is worth as much as $9,000 a person or $35,000 a family if multiple members die. By the end of 2022, FEMA had paid nearly $2.9 billion in Covid-19 death expenses. 
Funeral homes, mortuaries and state health departments widely advertise this benefit on their websites, as does FEMA. By contrast, the agency barely advertises funeral assistance to hurricane victims. And while around 80% of requests for Covid death benefits had been approved as of Jan. 1, some 80% of applications following hurricanes Harvey, Irma and Maria in 2017 were denied. FEMA offers no death benefit for the flu, HIV or any other infectious disease. Several physicians told us they are concerned that hospitals are being pressured by families to list Covid-19 on the death certificate. “Just try and leave Covid off the death certificate of a person who was asymptomatic positive and died in a car accident,” one infectious-disease doctor said. “Just try.” No one was willing to be quoted by name—unsurprisingly, since the implication is that their hospitals are falsifying death certificates. These programs create a vicious circle. They establish incentives to overstate the danger of Covid. The overstatement provides a justification to continue the state of emergency, which keeps the perverse incentives going. With effective vaccines and treatments widely available, and an infection fatality rate on par with flu, it’s past time to recognize that Covid is no longer an emergency requiring special policies.

These death counts, of course, were used to justify school closures, among other things, which have their own significant downsides:

The [Wall Street] Journal’s Ben Chapman and Douglas Belkin report on one massive cost of this disaster: a Stanford study showing a possible lifetime earnings decline of $70,000 for children resulting from Covid-era learning losses, especially in math: “If the learning losses aren’t recovered, K-12 students on average will grow into less educated, lower-skilled and less productive adults and will earn 5.6% less over the course of their lives than students educated just before the pandemic, said Eric A. Hanushek, a Stanford University economist who specializes in education. He said the losses could total $28 trillion over the rest of this century ...” Dr. Hanushek’s analysis echoes a study released in October by researchers from Harvard and Dartmouth Universities, which estimated that if the learning loss isn’t reversed, it would equate to a 1.6% drop in lifetime earnings for the average K-12 student. That study also found learning loss leads to lower high school graduation rates and college enrollment as well as higher teen motherhood, arrests and incarceration. The Journal reporters have more on the math disaster: “Nationwide, the percentage of eighth-grade students who failed to attain basic levels of math skills on the exam grew to 38% from 31% before the pandemic.”

Researchers at the University of California, San Francisco, also found that the Centers for Disease Control and Prevention made statistical errors in dozens of announcements, and that those errors almost always exaggerated the risks described.

In the next essay in this series, we’ll explore how well the experts who have cited various models of “climate change” have fared regarding the accuracy of their (and their models’) predictions.